Training Material

ChatGPT for editors: enhancing efficiency and effectiveness

Yunhee Whang

Science Editing 2024;11(1):84-90.

DOI: https://doi.org/10.6087/kcse.332

Published online: February 20, 2024

Compecs Inc, Seoul, Korea

- Correspondence to Yunhee Whang: yunhee@compecs.com

Copyright © 2024 Korean Council of Science Editors

This is an open access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

- This tutorial examines how ChatGPT can assist journal editors in improving the efficiency and effectiveness of academic publishing. It highlights ChatGPT’s key characteristics, focusing on the use of “Custom instructions” to generate tailored responses and plugin integration for accessing up-to-date information. The tutorial presents practical advice and illustrative examples to demonstrate how editors can adeptly employ these features to improve their work practices. It covers the intricacies of developing advanced prompts and the application of zero-shot and few-shot prompting techniques across a range of editorial tasks, including literature reviews, training novice reviewers, and improving language quality. Furthermore, the tutorial addresses potential challenges inherent in using ChatGPT, which include a lack of precision and sensitivity to cultural nuances, the presence of biases, and a limited vocabulary in specialized fields, among others. The tutorial concludes by advocating for an integrated approach, combining ChatGPT’s technological advancements with the critical insight of human editors. This approach emphasizes that ChatGPT should be recognized not as a replacement for human judgment and expertise in editorial processes, but as a tool that plays a supportive and complementary role.

- ChatGPT (OpenAI) is a large language model that uses the generative pre-trained transformer (GPT) architecture. Since its introduction in November 2022, ChatGPT has been applied in a variety of fields. Editors and researchers have been exploring how language models like ChatGPT can be used effectively in academic publishing and editing to enhance both efficiency and effectiveness [1–4]. This tutorial is designed to help journal editors make full use of ChatGPT. It covers the core functionalities of ChatGPT, emphasizing the crafting of effective prompts. The tutorial explores various prompt formulation techniques that can improve responses and provides insights into the model’s capabilities and limitations. There is no single correct or incorrect way to interact with ChatGPT. Nevertheless, adopting specific strategies can significantly improve the efficacy of one’s prompts. This tutorial aims to acquaint editors with these methods, enabling them to employ ChatGPT more effectively in their editorial duties.

Introduction

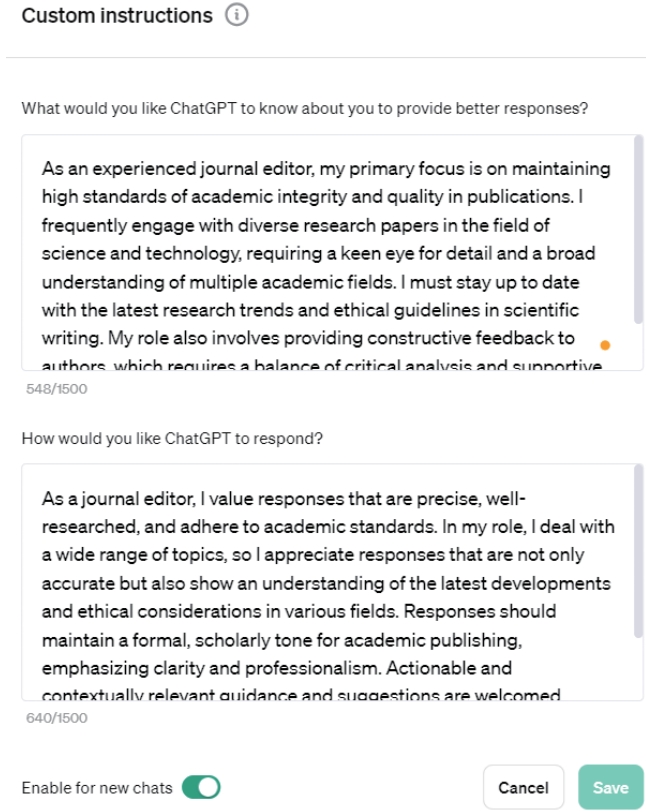

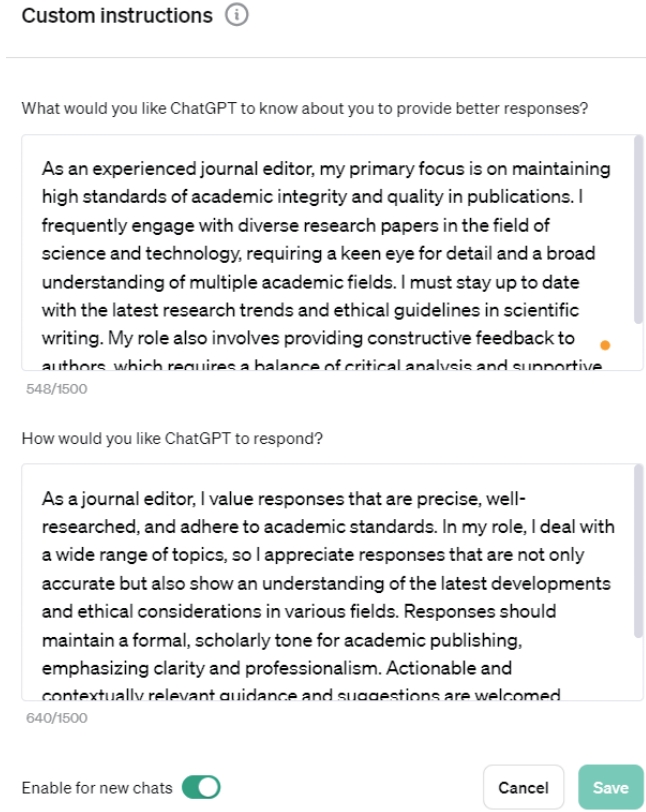

- Use “Custom instructions”

- By selecting the username at the bottom left of the interface, editors will find the “Custom instructions” option, situated beneath the “My Plan” and “My GPTs” menus. “Custom instructions” allow editors to add “preferences” or requirements that they would like ChatGPT to consider when generating its responses [5,6]. This feature enables ChatGPT to tailor its responses more effectively to their needs. To activate “Custom instructions,” two key questions must be addressed: “What would you like ChatGPT to know about you to provide better responses?” and “How would you like ChatGPT to respond?” (Fig. 1).

- In response to the first question, “What would you like ChatGPT to know about you to provide better responses?”, editors have the opportunity to share personal details. These may include their profession, specific goals, interests, or particular challenges they are facing. Alternatively, editors can assign a role they envisage for ChatGPT, or combine personal and role-based information. This input is crucial for the model to customize its responses to suit the editor’s unique context and informational needs. For instance, consider a newly appointed journal editor who already has a comprehensive understanding of general editorial processes. In such a scenario, it would be beneficial for the editor to identify as an “experienced journal editor.” This would prevent ChatGPT from providing basic editorial explanations that are already familiar to the editor, thereby increasing the relevance of the guidance it offers. Similarly, if the editor is proficient in using R, indicating this can help tailor the depth and nature of technical explanations in the responses they receive.

- For the second question, “How would you like ChatGPT to respond?”, it is beneficial to provide details including the preferred tone (e.g., formal or conversational), desired response length (e.g., detailed explanations or brief summaries), and specific requests for actionable advice or suggestions. “Custom instructions” are often overlooked by users, but they can significantly enhance both the efficiency and effectiveness of the content generated. Additionally, they have a significant impact on the style and appropriateness of the language used in the response.

- To fully harness ChatGPT’s capabilities, “Custom instructions” need to be thoughtfully adapted to each specific context or situation. OpenAI’s guidance on “Custom instructions” for ChatGPT notes that the answer to each of the two questions is limited to 1,500 characters [5]. In the absence of precise “Custom instructions,” ChatGPT tends to deliver more generalized information, which can lead to inconsistencies in output quality. Fig. 2 illustrates the difference in responses with and without the use of “Custom instructions.”
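Because long drafts are easy to overshoot, it can help to check the length of each answer before pasting it into the interface. The following is a minimal sketch, assuming the 1,500-character limit applies separately to the answer to each of the two questions; the function name, field names, and sample texts are hypothetical and not part of ChatGPT.

```python
# Hypothetical helper (not part of ChatGPT): checks draft "Custom instructions"
# against an assumed limit of 1,500 characters per answer.
CHAR_LIMIT = 1500


def check_custom_instructions(about_me: str, response_style: str) -> dict:
    """Return length, remaining characters, and fit status for each answer."""
    report = {}
    for name, text in (("about_me", about_me), ("response_style", response_style)):
        length = len(text)
        report[name] = {
            "length": length,
            "remaining": CHAR_LIMIT - length,
            "fits": length <= CHAR_LIMIT,
        }
    return report


# Illustrative drafts for the two questions.
about_me = (
    "I am an experienced journal editor in a medical field. "
    "I am proficient in R and familiar with standard editorial processes."
)
response_style = (
    "Use a formal tone. Keep answers brief unless I ask for detail. "
    "Explain the reason for each suggested edit."
)

report = check_custom_instructions(about_me, response_style)
for field, info in report.items():
    print(field, info)
```

A draft that does not fit can then be trimmed to the details that matter most for the task at hand, such as role, expertise, and preferred tone.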

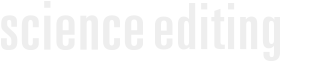

- Use plugins

- Enhancing ChatGPT with plugins markedly expands its functionality and usefulness, especially for researchers and journal editors. ChatGPT’s base knowledge is limited to data up to April 2023. However, integrating plugins enables access to current, real-time information, overcoming this limitation of ChatGPT’s training data. Popular plugins in the academic community include Scholar AI, PubMed, Consensus Search, and AskYourPDF, among others. Notably, a maximum of three plugins can be activated simultaneously.

- The full potential of these plugins is realized when they are integrated with “Custom instructions.” This tailored approach ensures that the results are precisely aligned with the specific context and requirements of the user. For example, designating a “researcher” or “journal editor” role in the “Custom instructions” significantly influences the nature of the output. In leveraging these role-specific cues, ChatGPT, in conjunction with the activated plugins, provides information that is not just up to date, but also highly relevant to the distinct needs of researchers and journal editors. This strategic customization helps users receive information that is both current and directly applicable to their specific professional needs.

Key Features of ChatGPT

- Guidelines

- Crafting a precise question or command is essential to obtaining the desired response from ChatGPT. While there is considerable flexibility in how one may interact with AI, following specific guidelines can substantially improve the accuracy and effectiveness of the prompts. This section outlines key principles (adapted from ChatGPT) and examples for generating prompts.

• Be specific and direct: Clearly state what you want the text to achieve. The more detailed you are in the input, the better the output tends to be. For example, “Write a concise summary of the latest research on X, suitable for a review article.”

• Define the style and tone: Although the desired style and tone might have been indicated in the “Custom instructions,” it is helpful to specify them in more detail in the prompt. For instance, “Compose a persuasive argument on the importance of X, using an optimistic and engaging tone.”

• Set structure (or format) expectations: If you have a particular structure (or format) in mind, such as a paragraph or bullet points, specify this in the prompt. You might say, “Draft a structured report on X, including an introduction, three key points, and a conclusion.”

• Request examples and evidence: Ask for the inclusion of examples, data, or quotations to improve clarity and authority. For example, “Explain the concept of herd immunity and support the explanation with real-world examples and statistical evidence.”

• Indicate the audience: Knowing the audience helps tailor the language complexity and terminology. For instance, “Explain X for experienced journal editors.”

• Limit word count: If brevity is important, set a word limit. For example, “In no more than 100 words, summarize the key findings of the recent study on X.”

• Ask for a specific perspective or angle: This can guide the content’s direction. For example, “From the perspective of a cardiovascular surgeon, discuss X.”
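The guidelines above can be treated as optional slots that are filled in around a core task. The sketch below is one possible way to assemble such a prompt as plain text; the `PromptSpec` class, its field names, and the wording of each added sentence are illustrative assumptions, not a format required by ChatGPT.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptSpec:
    """Hypothetical container mirroring the guidelines listed above."""
    task: str                          # be specific and direct
    perspective: Optional[str] = None  # specific perspective or angle
    audience: Optional[str] = None     # indicate the audience
    tone: Optional[str] = None         # define the style and tone
    structure: Optional[str] = None    # set structure or format expectations
    evidence: bool = False             # request examples and evidence
    word_limit: Optional[int] = None   # limit word count


def build_prompt(spec: PromptSpec) -> str:
    """Join the task with whichever optional elements were provided."""
    parts = []
    if spec.perspective:
        parts.append(f"Answer from the perspective of {spec.perspective}.")
    parts.append(spec.task.strip())
    if spec.audience:
        parts.append(f"Audience: {spec.audience}.")
    if spec.tone:
        parts.append(f"Tone: {spec.tone}.")
    if spec.structure:
        parts.append(f"Format: {spec.structure}.")
    if spec.evidence:
        parts.append("Support the explanation with examples and evidence.")
    if spec.word_limit is not None:
        parts.append(f"Use no more than {spec.word_limit} words.")
    return "\n".join(parts)


prompt = build_prompt(
    PromptSpec(
        task="Summarize the key findings of the recent study on X.",
        audience="experienced journal editors",
        tone="formal",
        structure="bullet points",
        word_limit=100,
    )
)
print(prompt)
```

Leaving a slot empty simply omits that line, so the same helper covers both a bare request and a fully specified one.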

- Types of prompting techniques

- In this section, we explore two pivotal prompting techniques for AI language models: zero-shot and few-shot prompting [7,8]. These methods are crucial for editors looking to optimize their prompt crafting skills for effective interactions with AI systems like ChatGPT. Table 1 presents a comparison highlighting the distinct characteristics of each technique.

- This comparative analysis is designed to assist editors in discerning the most suitable prompting approach for varying editorial contexts. These techniques can significantly enhance the efficiency and effectiveness of editorial processes in academic publishing. Specific examples of how these techniques can be applied are presented in the following section, which discusses the use of ChatGPT in the editorial process.
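The difference between the two techniques is easiest to see in the text that is actually sent to the model. The following sketch builds a zero-shot prompt (instruction only) and a few-shot prompt (instruction plus worked examples) as plain strings; the function names, the layout of the examples, and the sample reviewer comments are invented for illustration.

```python
from typing import List, Tuple


def zero_shot_prompt(instruction: str, text: str) -> str:
    """Zero-shot: the model receives only the instruction and the input."""
    return f"{instruction}\n\nInput:\n{text}"


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], text: str) -> str:
    """Few-shot: a handful of input/output pairs precede the new input."""
    blocks = [instruction, ""]
    for number, (example_input, example_output) in enumerate(examples, start=1):
        blocks.append(f"Example {number} input:\n{example_input}")
        blocks.append(f"Example {number} output:\n{example_output}")
        blocks.append("")
    blocks.append(f"Now apply the same approach to this input:\n{text}")
    return "\n".join(blocks)


instruction = "Rewrite the reviewer comment so that it is constructive and professional."

# Invented sample pairs; in practice these would be exemplary comments chosen by the editor.
examples = [
    ("The methods are a mess.",
     "The methods section would benefit from a clearer description of the sampling procedure."),
    ("Nobody will care about this result.",
     "Please explain the practical significance of this result for readers."),
]

draft_comment = "The discussion is far too long and boring."

zero = zero_shot_prompt(instruction, draft_comment)
few = few_shot_prompt(instruction, examples, draft_comment)
print(few)
```

The few-shot version is longer, but the examples show the model the expected style directly instead of describing it.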

Write Effective Prompts

- The integration of ChatGPT into the editorial workflow offers significant benefits. First, it can be used to conduct preliminary literature reviews, identifying key themes, methodologies, and gaps in the literature. Fig. 3 illustrates this process, utilizing the zero-shot prompting technique and incorporating plugins like Scholar AI. These plugins are particularly valuable as they access recent information, supplementing ChatGPT’s knowledge base, which is current until April 2023. Editors can obtain better results by combining “Custom instructions,” proper prompting techniques, and plugins.

- Second, ChatGPT can serve as a training tool for novice editors and reviewers, guiding them to provide constructive, clear, and professional feedback. Consider a scenario where a journal editor aims to improve the quality of peer review comments from inexperienced reviewers. The editor could employ the few-shot prompting technique with ChatGPT. Initially, the editor selects two to three exemplary peer reviews, showcasing attributes like constructive feedback, clarity, thoroughness, and professionalism. The novice reviewers then provide these model reviews to ChatGPT as examples, alongside specific instructions from the editor. For instance, they might say, “Here are three high-quality peer reviews, each exemplary in certain aspects. Use these as benchmarks to refine my draft comments.” The novice reviewers input their initial comments, which might be unrefined or overly critical, into ChatGPT. Guided by the model reviews, ChatGPT then revises the comments to align with the demonstrated standards, improving their structure, language, and tone while preserving the core intent and critical observations. This method streamlines and elevates the editorial process.

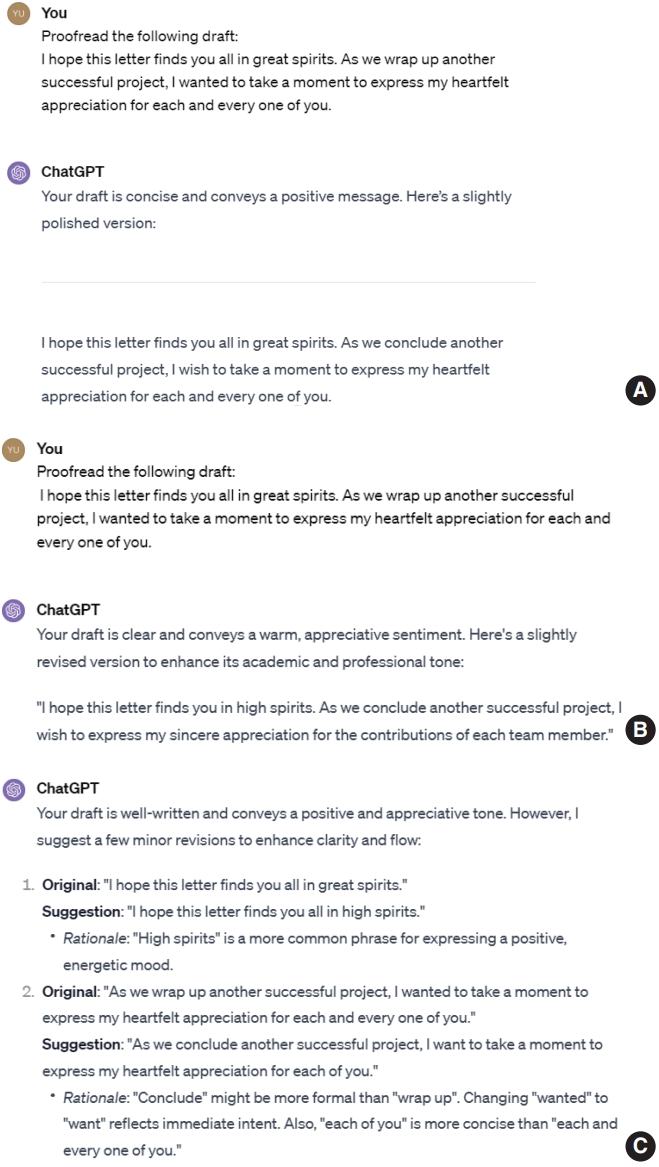

- Third, ChatGPT provides valuable assistance in language editing. This is especially beneficial for non-native English-speaking editors, helping them ensure clarity and coherence in their editorial work. It helps bridge communication gaps within academic discourse and maintain the standards of international academic publications. Below are examples of simple editing-related prompts: “Clarify the text below and correct any spelling, grammar and punctuation errors if there are any,” “Improve flow, academic tone, and overall quality,” and “Improve language style, flow, and readability.” If editors want to double-check the changes, they may add a further prompt: “Bold all changes you make.” It is crucial to recognize that enabling “Custom instructions” significantly influences each output. For instance, Fig. 4A displays a basic editing response from ChatGPT without “Custom instructions” activated. Fig. 4B and 4C, using the same prompt, “Proofread the following draft,” demonstrate how “Custom instructions” modify ChatGPT’s responses. Notably, Fig. 4C shows that when “Custom instructions” request explanations, ChatGPT provides detailed rationales for each edit. This highlights the fact that effective prompt crafting, while important, is not the sole factor in optimizing results. The incorporation of well-designed “Custom instructions” significantly increases ChatGPT’s utility, producing results that are more closely aligned with the specific requirements of editors.
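Editors who want an independent check on what was changed, rather than relying on the model to mark its own edits, can compare the original and edited text locally. The sketch below uses Python’s standard `difflib` module to mark word-level changes; the function name, the `**` bold markers, and the “[deleted: …]” notation are illustrative choices, and the sample sentences are invented.

```python
import difflib


def bold_changes(original: str, edited: str) -> str:
    """Return the edited text with changed or inserted words wrapped in ** **.

    Words removed from the original are shown as [deleted: ...].
    The comparison is word-based and ignores differences in whitespace.
    """
    original_words = original.split()
    edited_words = edited.split()
    matcher = difflib.SequenceMatcher(a=original_words, b=edited_words, autojunk=False)

    output = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            output.extend(edited_words[j1:j2])
        elif tag in ("replace", "insert"):
            output.append("**" + " ".join(edited_words[j1:j2]) + "**")
        elif tag == "delete":
            output.append("[deleted: " + " ".join(original_words[i1:i2]) + "]")
    return " ".join(output)


original_text = "The datas was analysed using R."
edited_text = "The data were analysed using R."
marked = bold_changes(original_text, edited_text)
print(marked)
```

Because the comparison runs outside ChatGPT, it also reveals edits the model made but did not flag.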

- Some other example prompts that can be effectively utilized in the editorial process are as follows:

• Does this research paper on X adhere to the standard ethical guidelines for scientific writing?

• What are the current trends in X, and how do they compare to trends from 5 years ago?

• What are the best practices for conducting a blind peer review for a scientific journal?

• How might developments in X influence future research in Y?

• What are the ethical considerations when publishing research involving human subjects?

• What are the guidelines for managing conflicts of interest in academic publishing?

How can Editorial Processes Benefit from ChatGPT?

- Integrating ChatGPT into the editorial process, particularly for non-native English-speaking editors, presents unique challenges. One significant challenge lies in the tool’s inherent dependency on its training data, which can be a source of biases and inaccuracies in its responses. This limitation is especially problematic for scientific writing, which requires a high degree of precision and accuracy. In addition, ChatGPT may not always capture the dynamic and culturally diverse nuances of academic writing. Its responses may miss the subtleties and context-specific intricacies that are vital in scholarly communication. For example, in medical research, cultural factors can significantly influence both the research itself and its interpretation, and an incomplete understanding of these issues can lead to oversimplified or even inaccurate representations of the subject matter. This limitation heightens the risk of misunderstanding or misinterpretation in editing, with the potential to significantly compromise the authenticity and integrity of academic work.

- Furthermore, ChatGPT’s responses can be inconsistent, particularly when dealing with long or complex texts that require a uniform style and specialized vocabulary. In academic writing, maintaining a consistent style and tone is vital for the clarity and readability of scientific papers. Additionally, ChatGPT may not always correctly use the specific technical vocabulary or jargon of certain scientific fields. This limitation can lead to a gap in communication, especially when dealing with highly specialized or emerging areas of research where precise terminology is key. Editors may also notice that ChatGPT tends to overuse certain phrases. Users have been sharing lists of overused phrases in discussions on platforms such as Reddit [9] and Medium [10]. When reviewing or editing content, it is essential to watch out for these phrases and consider replacing them with more diverse and contextually appropriate language to improve the overall quality of the text and avoid clichés.
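Watching for overused phrases can be partly automated with a simple watchlist scan. The following is a minimal sketch; the phrases in `WATCHLIST` are illustrative placeholders chosen for this example and are not taken from the lists cited above, so editors would substitute their own.

```python
import re
from collections import Counter
from typing import Iterable

# Placeholder phrases for illustration only; replace with a list of your own.
WATCHLIST = [
    "delve into",
    "it is important to note",
    "plays a crucial role",
]


def find_overused(text: str, watchlist: Iterable[str]) -> Counter:
    """Count case-insensitive, whole-phrase occurrences of each watchlist entry."""
    lowered = text.lower()
    counts = Counter()
    for phrase in watchlist:
        pattern = r"\b" + re.escape(phrase.lower()) + r"\b"
        hits = len(re.findall(pattern, lowered))
        if hits:
            counts[phrase] = hits
    return counts


sample = (
    "It is important to note that peer review plays a crucial role in publishing. "
    "In this section we delve into reviewer training. "
    "It is important to note that guidelines differ between journals."
)

for phrase, hits in find_overused(sample, WATCHLIST).most_common():
    print(f"{hits} x {phrase}")
```

A scan like this only flags candidates; whether a phrase should be replaced remains an editorial judgment.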

Challenges and Limitations of Using ChatGPT

- ChatGPT is a sophisticated generative language model that offers a wide array of capabilities extending beyond simple text generation. The key to harnessing its full potential lies in understanding and effectively utilizing its main features, including “Custom instructions,” which allow users to assign roles and control the output. Rather than merely replicating prompts used by others, it is crucial for editors to comprehend how combining “Custom instructions” with thoughtfully crafted prompts can yield customized and superior results. Although ChatGPT demonstrates proficiency in broad language tasks, its effectiveness in highly specific or specialized domains may be limited. Therefore, while ChatGPT can substantially assist with editorial tasks, it should be used as a complement to, rather than a replacement for, human expertise and judgment.

Conclusion

Conflict of Interest

No potential conflict of interest relevant to this article was reported.

Funding

The author received no financial support for this work.

Data Availability

Data sharing is not applicable to this article as no new data were created or analyzed.

Notes

Supplementary Materials

- 1. Victor BG, Sokol RL, Goldkind L, Perron BR. Recommendations for social work researchers and journal editors on the use of generative AI and large language models. J Soc Soc Work Res 2023;14:563-77. https://doi.org/10.1086/726021.

- 2. Miller K, Gunn E, Cochran A, et al. Use of large language models and artificial intelligence tools in works submitted to Journal of Clinical Oncology. J Clin Oncol 2023;41:3480-1. https://doi.org/10.1200/JCO.23.00819.

- 3. Hamm B, Marti-Bonmati L, Sardanelli F. ESR Journals editors’ joint statement on guidelines for the use of large language models by authors, reviewers, and editors. Eur Radiol 2024 Jan 11 [Epub]. https://doi.org/10.1007/s00330-023-10511-8.

- 4. Sage Publications. Using AI in peer review and publishing [Internet]. Sage Publications; 2024 [cited 2024 Jan 20]. Available from: https://us.sagepub.com/en-us/nam/using-ai-in-peer-review-and-publishing.

- 5. OpenAI. Custom instructions for ChatGPT [Internet]. OpenAI; 2024 [cited 2024 Jan 20]. Available from: https://help.openai.com/en/articles/8096356-custom-instructions-for-chatgpt.

- 6. OpenAI. Custom instructions for ChatGPT [Internet]. OpenAI; 2023 [cited 2024 Jan 20]. Available from: https://openai.com/blog/custom-instructions-for-chatgpt.

- 7. Tam A. What are zero-shot prompting and few-shot prompting [Internet]. Machine Learning Mastery; 2023 [cited 2024 Jan 20]. Available from: https://machinelearningmastery.com/what-are-zero-shot-prompting-and-few-shot-prompting/.

- 8. Weng L. Prompt engineering [Internet]. Lil’Log; 2023 [cited 2024 Jan 20]. Available from: https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/.

- 9. Reddit. Overused ChatGPT terms: add to my list! [Internet]. Reddit; 2023 [cited 2024 Jan 27]. Available from: https://www.reddit.com/r/ChatGPTPro/comments/163ndbh/overused_chatgpt_terms_add_to_my_list/.

- 10. Daniel. How to identify AI writings: 25 phrases overused by ChatGPT [Internet]. Medium; 2023 [cited 2024 Jan 27]. Available from: https://medium.com/@onlydaniel/how-to-identify-ai-writings-25-phrases-overused-by-chatgpt-a4d4d7896f6b.

References
