Review

Influence of artificial intelligence and chatbots on research integrity and publication ethics

Payam Hosseinzadeh Kasani1,2, Kee Hyun Cho3,4, Jae-Won Jang1,5, Cheol-Heui Yun6,7,8

Science Editing 2024;11(1):12-25.

DOI: https://doi.org/10.6087/kcse.323

Published online: January 25, 2024

1Department of Neurology, Kangwon National University Hospital, Chuncheon, Korea

2Interdisciplinary Graduate Program in Medical Bigdata Convergence, Kangwon National University, Chuncheon, Korea

3Department of Pediatrics, Kangwon National University Hospital, Chuncheon, Korea

4Department of Pediatrics, Kangwon National University School of Medicine, Kangwon National University, Chuncheon, Korea

5Department of Neurology, Kangwon National University School of Medicine, Chuncheon, Korea

6Department of Agricultural Biotechnology, Research Institute of Agriculture and Life Sciences, Seoul National University, Seoul, Korea

7Center for Food and Bioconvergence, and Interdisciplinary Programs in Agricultural Genomics, Seoul National University, Seoul, Korea

8Institutes of Green Bio Science and Technology, Seoul National University, Pyeongchang, Korea

- Correspondence to Cheol-Heui Yun cyun@snu.ac.kr

Copyright © 2024 Korean Council of Science Editors

This is an open access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

- Artificial intelligence (AI)-powered chatbots are rapidly supplanting human-derived scholarly work in the fast-paced digital age. This necessitates a re-evaluation of our traditional research and publication ethics, which is the focus of this article. We explore the ethical issues that arise when AI chatbots are employed in research and publication. We critically examine the attribution of academic work, strategies for preventing plagiarism, the trustworthiness of AI-generated content, and the integration of empathy into these systems. Current approaches to ethical education, in our opinion, fall short of appropriately addressing these problems. We propose comprehensive initiatives to tackle these emerging ethical concerns. This review also examines the limitations of current chatbot detectors, underscoring the necessity for more sophisticated technology to safeguard academic integrity. The incorporation of AI and chatbots into the research environment is set to transform the way we approach scholarly inquiries. However, our study emphasizes the importance of employing these tools ethically within research and academia. As we move forward, it is of the utmost importance to concentrate on creating robust, flexible strategies and establishing comprehensive regulations that effectively align these potential technological developments with stringent ethical standards. We believe that this is an essential measure to ensure that the advancement of AI chatbots significantly augments the value of scholarly research activities, including publications, rather than introducing potential ethical quandaries.

- The advancement of technology has brought about significant changes in many aspects of our lives, including education, the process of learning, and, consequently, the transmission of skills. Higher education, in particular, has been revolutionized by the integration of technology, opening new avenues for study and research. One of the most promising developments in technology is the emergence of artificial intelligence (AI), which has the potential to transform education in unprecedented ways. As AI continues to evolve, we are witnessing significant advances in education within a short time, including personalized learning experiences [1], intelligent tutoring systems [2,3], automated administrative tasks [4], data-driven decision-making [5,6], and intelligent virtual environments [7]. In recent years, there has been a growing interest in exploring the potential benefits of using AI systems in education [8,9]. Scholars and experts have extensively examined and documented the various advantages that can be obtained by integrating AI into the educational process, from increased efficiency and accuracy to improved student outcomes and engagement.

- One specific AI application gaining traction in educational environments is the chatbot, an AI-powered virtual assistant designed to interact with humans in their natural languages [10]. These software-based systems are employed in various industries, including customer service [11], education [12], and healthcare [13]. Often equipped with machine learning capabilities, they can provide immediate responses to queries, offering support that benefits both students and educators. The potential benefits of chatbots in education are vast; they can provide instant feedback, offer round-the-clock support, and deliver personalized instruction tailored to each student’s unique learning pace and style. In the English-as-a-foreign-language environment [14], they advance the adoption of messaging platforms [15], improve individual learning performance, enhance teamwork and collaboration, and indirectly improve overall team performance [16]. Chatbots can also assist educators by handling administrative tasks with less effort, thereby freeing up their time and energy for teaching and mentoring [17,18].

- Despite the apparent benefits, the use of AI and chatbots in education and academic publishing presents several concerns and challenges. These issues include data privacy, bias, and the ethical implications of employing AI to make decisions regarding student performance. Scholars have advocated for increased transparency and accountability in the use of AI systems within the realm of research and publication ethics. Additionally, there are numerous concerns about the quality and accuracy of the responses, as well as the efficacy of the design and implementation of chatbots.

- This review attempts to clarify how AI chatbots operate and what role they play in research and publication. Issues that remain to be addressed include the variability in AI chatbot utilization across academic disciplines, their impact on fostering ethical and responsible practices in scholarly activities, their interactions with human researchers and publishers, and the implications for the quality and integrity of academic work. Furthermore, the extensive adoption of AI chatbots, their cost-effectiveness and economic viability, their environmental impact, and any regulatory challenges associated with their use in research and publication warrant consideration.

- It is essential to address the aforementioned concerns and challenges to ensure that chatbots are utilized effectively, ethically, and responsibly in academic publishing, thereby upholding the integrity of scholarly work. Adopting this strategy will also ensure that the deployment of AI and chatbots leads to positive social transformation.

- Ethics statement

- This study did not involve human subjects; therefore, neither Institutional Review Board approval nor informed consent was required.

Introduction

- Chatbots are AI-driven programs that use natural language processing (NLP) and natural language understanding (NLU) to mimic human conversations [10]. They process human language to respond to inquiries, offering interactions akin to speaking with an actual person. Through data analysis, chatbots comprehend messages, discern user intentions, and provide appropriate responses, sustaining the dialogue until the matter is resolved or escalated to a human.

- Chatbots are utilized differently in various settings, such as processing orders in retail or managing inquiries in telecommunications. They can be categorized as either rule-based or AI-based. AI chatbots employ machine learning to address open-ended questions and improve through continuous learning, while rule-based bots function according to a predetermined array of responses.

- These bots operate across various platforms, including messaging apps, mobile apps, websites, and voice applications, ranging from simple query-based programs to sophisticated digital assistants that learn and adapt. They function using algorithms and pattern matching, with complex inquiries requiring specific patterns for accurate responses. By utilizing approaches such as multinomial naïve Bayes for text classification and NLP, they create hierarchical structures to manage processes, representing a dynamic facet of AI technology.
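As a rough illustration of the text classification step mentioned above, the following minimal sketch implements a multinomial naïve Bayes intent classifier with Laplace smoothing using only the Python standard library. The training phrases, intent labels, canned replies, and all function and class names are hypothetical and chosen only for this example; production chatbots typically rely on far larger datasets and dedicated libraries.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it on non-alphanumeric characters."""
    cleaned = "".join(c.lower() if c.isalnum() else " " for c in text)
    return cleaned.split()


class MultinomialNaiveBayes:
    """Tiny multinomial naive Bayes classifier with Laplace smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha                       # smoothing strength
        self.class_docs = Counter()              # documents seen per intent
        self.word_counts = defaultdict(Counter)  # token counts per intent
        self.vocab = set()

    def fit(self, examples):
        for text, label in examples:
            self.class_docs[label] += 1
            for token in tokenize(text):
                self.word_counts[label][token] += 1
                self.vocab.add(token)
        return self

    def log_scores(self, text):
        """Return log P(intent) + sum of log P(token | intent) per intent."""
        total_docs = sum(self.class_docs.values())
        vocab_size = len(self.vocab)
        tokens = [t for t in tokenize(text) if t in self.vocab]
        scores = {}
        for label, n_docs in self.class_docs.items():
            score = math.log(n_docs / total_docs)
            denom = sum(self.word_counts[label].values()) + self.alpha * vocab_size
            for token in tokens:
                score += math.log((self.word_counts[label][token] + self.alpha) / denom)
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Hypothetical training data: (user message, intent label).
TRAINING = [
    ("what are your opening hours", "hours"),
    ("when does the branch open", "hours"),
    ("is the branch open on sunday", "hours"),
    ("block my credit card", "block_card"),
    ("i lost my card please block it", "block_card"),
    ("my card was stolen", "block_card"),
]

# Hypothetical canned replies, looked up once the intent is known.
RESPONSES = {
    "hours": "Our branches are open on weekdays.",
    "block_card": "Your card has been blocked.",
}

classifier = MultinomialNaiveBayes().fit(TRAINING)


def reply(message):
    """Classify the message and return the matching canned reply."""
    return RESPONSES[classifier.predict(message)]


if __name__ == "__main__":
    print(reply("please block my stolen card"))
```

The classifier only decides which intent a message belongs to; the reply itself comes from a fixed lookup table, which mirrors the combination of learned classification and predetermined responses described above.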

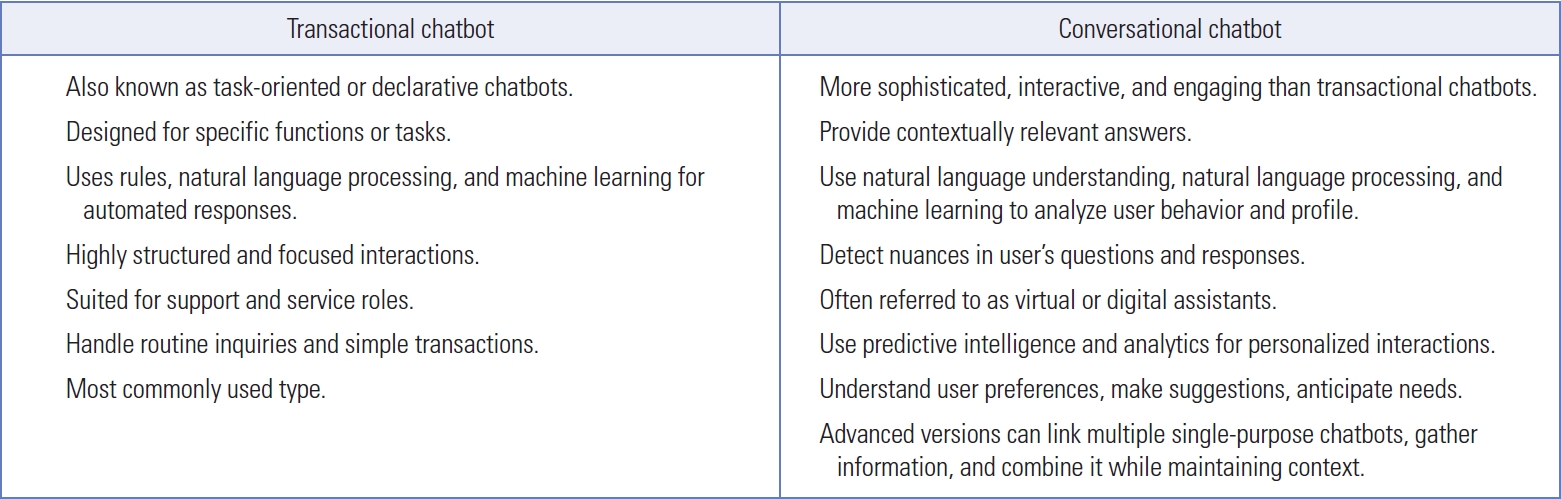

- Chatbots can be broadly categorized into two types: transactional chatbots and conversational chatbots [10,19]. Transactional chatbots, or task-oriented bots, manage specific tasks using rules, NLP, and occasionally machine learning to automate responses to user inquiries. Their interactions are structured and are particularly suited for support and service tasks, such as answering routine questions and handling transactions. Although they facilitate conversational interaction through NLP, they are not as sophisticated as conversational chatbots. These chatbots streamline banking tasks, including identification verification, credit card blocking, transfer confirmations, and providing branch hours, thereby enhancing efficiency and customer satisfaction with prompt responses. In the insurance sector, they expedite processes by assisting with quotes, facilitating the download of certificates, and converting prospects into customers directly on the chatbot platform. Additionally, these bots support energy companies and mobile providers. In the realm of e-commerce, they improve the shopping experience by aiding with product selection, payment processing, and order modifications. This allows human agents to focus on more complex tasks and strategic initiatives. A detailed description of chatbots is presented in Fig. 1.

- Conversational chatbots, which are more sophisticated than transactional ones, use NLU, NLP, and machine learning to deliver contextually relevant and nuanced responses. They analyze user behavior and detect subtleties in queries. Often referred to as virtual or digital assistants, these chatbots offer personalized interactions, learn user preferences, make recommendations, and predict needs. They achieve this by integrating multiple single-purpose chatbots to handle complex tasks in a contextual manner.

Comprehensive Overview of Chatbots

- The implementation of AI and chatbots has raised a number of ethical challenges and concerns. Issues such as privacy, consent, transparency, and accountability have become increasingly prominent. Additionally, it is essential to consider potential copyright infringements when utilizing AI-generated language. To date, AI tools and chatbots do not meet the standards of authorship, as they cannot be held legally responsible for the quality and validity of the outcomes they report. This necessitates caution and a sense of responsibility when employing AI-generated language across various domains [20]. In light of these facts, it is crucial to delve further into the ethical landscape of AI and chatbots. We must therefore ask the following: What are the primary ethical issues associated with the use of AI and chatbots? How can these challenges be effectively addressed to ensure the ethical and responsible use of these technologies?

- AI tools hold significant potential for advancing research and education across various fields. However, it is crucial to acknowledge and address the risk that these technologies might be disproportionately utilized by a small group of wealthy or important people. To ensure equitable access to the benefits and prevent the deepening of existing disparities, it is essential to engage with people from a wide range of backgrounds and communities [21]. The use of AI and chatbots introduces distinct ethical challenges within academic and scientific communities. By understanding these challenges, we can develop strategies and guidelines to ensure their ethical use, thereby maximizing the advantages and minimizing potential harms. This knowledge allows us to assess how effectively ethical objectives are being met across different industries and their current status. This leads us to ask the following: How well are ethical objectives integrated and maintained in these fields today? What considerations should be made when incorporating ethical objectives into these practices? What steps can be taken to ensure that these ethical objectives are not merely abstract ideas but are actively realized in practice, influencing behavior and decision-making?

- The ethical issues and challenges that come with implementing AI and chatbots, as previously mentioned, have been the focus of numerous studies. For example, data science needs to develop professional ethics and establish itself as a distinct profession to foster public trust in its societal applications and interactions [22]. Students must be taught a variety of complementary ethical reasoning techniques in order for them to make ethical design and implementation choices, as well as informed career decisions [23]. A stand-alone AI ethics course and its integration into a general AI course have both been proposed [24].

- Although AI-powered chatbots may be valuable for marketing highly personalized products, users should be aware of the power AI tools may have to acquire and disseminate personal data, and of the resulting privacy consequences. Følstad et al. [25] highlighted six themes of interest, including ethics and privacy, in a study on the agenda for chatbot research. The authors emphasized the need for further research to pinpoint ethical and privacy issues in the design and implementation of chatbots, as well as to address the ethical implications of their use. Additionally, they explored the ethical concerns associated with the democratization of chatbots, especially regarding fairness, nondiscrimination, and justice, topics that are central to the ongoing discourse on AI ethics [26]. Taken together, these studies underscore the importance of considering ethics in the development and application of AI and chatbot technologies.

The Implementation of AI and Chatbots: Ethical Challenges and Considerations

- Empathy plays a crucial role in the realm of AI and chatbots, serving as a key component in promoting ethical behavior and maintaining integrity. The journey toward creating empathetic AI and chatbots necessitates a thoughtful strategy that considers the potential impact of one’s actions on others and promotes behaviors that reflect human affective empathy. Despite this, a prevailing trend tends to neglect this imperative, opting instead for an algorithmic model that could give rise to sociopathic tendencies. By tackling these concerns, we can improve the cooperation and complexity of these systems, thereby enhancing AI-human interactions in the fields of research, education, and publication ethics.

- Navigating the path toward empathic AI is fraught with obstacles. Even an AI system programmed to understand feelings and emotions may, under certain conditions, opt to engage in harmful behaviors. The duty of care towards AI can conflict with its functional objectives, adding complexity to the issue. Moreover, the introduction of empathic qualities in AI can provoke ethical dilemmas. The advanced cognitive capabilities of AI present an even more intricate problem. While AI can propose innovative solutions, these may initially cause discomfort or be deemed unacceptable from a human standpoint [27]. Despite these hurdles, empathic AI holds the promise of significant benefits, such as surpassing the limitations of human empathy through its extensive cognitive capacities, thereby enhancing research and publication ethics. Nonetheless, this progress does not negate the essential role of human experts in addressing the ethical and social intricacies associated with these technologies.

- The possible integration of empathy into AI systems presents an intriguing new aspect to the field. The proposed framework includes the understanding and sharing of human experiences, allowing AI to recognize potential harm and prevent poor decisions [28]. Furthermore, AI systems might evolve to promote positive experiences, representing a substantial advancement in AI.

- With the cognitive and emotional components of empathy, AI has the potential to address complex problems that often elude human policymakers, such as resource allocation and dispute resolution. In this optimistic scenario, AI is portrayed as humanity’s ultimate ally, transforming from a potential threat into a tool for addressing problems on a civilizational scale. However, amidst these technological advancements, it is crucial to remember the significance of tangible scientific facilities in fostering and maintaining research integrity.

- Collectively, the synergistic relationship between AI and human researchers, enhanced by the infusion of empathy into AI, fosters a hopeful outlook for AI’s role in research and publication. Maintaining research integrity and ethical adherence in this evolving landscape is paramount.

Artificial Empathy: Shaping the Ethical Landscape of AI in Research and Publication

- The advent of AI, autonomous systems, and chatbots has brought about a paradigm shift in various sectors, including education and research. These technologies, while offering immense potential benefits, also pose significant ethical challenges. It is crucial to develop a comprehensive understanding of these ethical implications to ensure the responsible and ethical use of AI, autonomous systems, and chatbots.

- How can we guarantee that autonomous machines and chatbots behave ethically? What are the key ethical challenges that AI-equipped machines, such as autonomous automobiles, are dealing with, and how can these issues be successfully resolved? How can we guide future research in the field of AI ethics, and what role does defining key concepts and terms play in this process? Furthermore, how will the ethical landscape be affected by the use of chatbots in education and research?

- The studies discussed below provide valuable insights into these questions. They explore various aspects of AI ethics, including the development of ethical guidelines and principles for autonomous machines and chatbots, the ethical challenges posed by the use of machines in education and research, the use of keywords to direct AI ethics research, and the effects of AI and chatbots on the field of education and research.

- A study [29] proposed a paradigm of case-supported, principle-based behavior to ensure the ethical behavior of autonomous machines. The authors suggest that a consensus on ethical principles is likely to emerge in areas where autonomous systems are deployed and in relation to the actions they perform. According to the study, it is more feasible for people to agree on how machines should treat human beings than on how humans should treat each other. These findings underscore the necessity of defining ethical guidelines and principles for the deployment and use of autonomous machines to ensure their ethical behavior and accountability.

- Another study [30] highlighted the challenges faced by these approaches and the necessity for AI-equipped machines, such as autonomous vehicles, to make ethical decisions. The authors suggested that teaching ethics to machines is only minimally required, as a significant part of the problems encountered by AI-equipped machines can be resolved through conventional human ethical decision-making. They also cautioned against framing current ethical dilemmas using extreme outlier scenarios, like the Trolley problem narratives.

- An analysis of recent advances in technical solutions for AI governance, along with previous surveys that focused on psychological, sociological, and legal issues, has been reported [31]. The authors proposed a taxonomy that divides the field into four categories: exploring ethical conundrums, individual ethical decision frameworks, collective ethical decision frameworks, and ethics in human-AI interactions. They highlighted the key techniques used in each approach and discussed promising future research directions for the successful integration of ethical AI systems into human societies. Exploring ethical conundrums involves unraveling complex ethical problems that are often marked by conflicts between differing principles or societal norms, and seeking to propose potential pathways or solutions to these intricate issues. Individual ethical decision frameworks, on the other hand, focus on designing ethical models that guide AI systems at an individual level, typically combining rule- and example-based approaches. Extending this to collective ethical decision frameworks, the focus shifts to group decision-making, aiming to build systems where multiple AI agents collectively contribute to decisions, reflecting a more diverse range of ethical preferences. Lastly, ethics in human-AI interactions examines the ethical considerations when AI systems directly interact with humans, ensuring that AI can communicate its decisions effectively and ethically. The ultimate objective is to refine these techniques for the broader goal of ethical AI governance, contributing to a world where AI systems can ethically coexist and interact with humanity.

- In contrast, another study [32] took a more granular approach by focusing on the language and terminology employed in AI ethics discussions. Through a keyword-based systematic mapping study, this research highlighted the significance of precise concepts and their definitions in molding the discourse. It brought attention to the subtleties that may affect interpretations and applications of AI ethics.

- Within the context of practical applications of AI ethics, two studies offered different perspectives. One investigation [33] adopted a data-driven methodology, conducting a quantitative analysis of the prevalence and usage of ethics-related research across prominent AI, machine learning, and robotics conferences and journals. This research emphasizes the frequency of ethics-related terminology in scholarly articles, providing empirical evidence of the primary concerns within the field. In contrast, a different study [34] took a more policy-oriented approach, investigating the development of realistic and workable ethical codes or regulations in the rapidly evolving field of AI. This research focused on the implementation of ethical guidelines, highlighting the need for feasible and adaptable regulations in the face of rapid technological advancements.

- The intersection of AI and education has been explored in two distinct studies, each emphasizing different aspects of this intersection. One study [23] emphasized the importance of ethical training for students in the context of AI, arguing for the need to equip students with multiple complementary modes of ethical reasoning. This study highlighted the proactive role of education in preparing students for ethical decision-making in AI. In contrast, another study [35] took a more reactive approach, assessing the impact of AI on existing educational structures and processes. This research focused on the practical applications and effects of AI in administration, instruction, and learning, highlighting the transformative potential of AI in the educational setting (Table 1) [23,29–35].

- Collectively, these studies highlight the importance of a structured approach to understanding AI ethics, the need for clear and accurate language in AI discourse, the significance of data-driven insights into ethical considerations, the necessity for practical and workable ethical codes or regulations, the value of ethical training, and the impact of AI on various sectors, including education.

- In the midst of this dynamic intersection of AI, education, and research, we now turn to a specific practical scenario that highlights the potential of AI technologies, notably ChatGPT (OpenAI), for reshaping academic practices.

Use of Chatbots in Education and Research

- Citations are pivotal to scholarly communication, ensuring transparency and the ability to trace sources, thereby connecting historical and current research. They maintain academic honesty, showcase collaborative efforts in knowledge creation, and facilitate source scrutiny, which also indicates patterns of intellectual influence. These practices enable recognition of intellectual work and promote a responsible academic environment. As a result, citation-enrichment tools like Scite (scite LLC; https://scite.ai) could play a pivotal role in research and publication.

- Scite.ai [36] is an AI-powered research tool that offers an advanced approach to the conventional citation index system. Similar to other citation indexes such as Web of Science, Scopus, or Google Scholar, Scite.ai provides citation counts, but its distinctive advantage lies in its deeper analysis of citations. Scite.ai enables users to develop a sophisticated understanding of how an article is being cited in other works by classifying citations into three categories: supporting, contrasting, and mentioning. This differentiation is enabled by the extraction of citation sentences, or “citances,” from the full-text articles. The phrase “citations of a publication” refers to the passages in the citing publications that cite the publication under consideration [37]. This approach can improve the summarization of scientific articles and is particularly useful for advanced evaluations of references. Citances, along with the preceding and following sentences, provide broader context for the citation, allowing users to grasp how an article or topic is cited without reviewing the full text of each citing article. This feature saves time and offers a wealth of additional information. Scite’s extensive scholarly metadata repository, featuring over 179 million articles and 1.2 billion citation statements, is unparalleled, a result of partnerships with multiple publishers, including Wiley, Sage, and the American Chemical Society. ChatGPT was integrated to leverage Scite’s unique data, culminating in the creation of the Scite Assistant (https://scite.ai/assistant). This improved feature uses ChatGPT to generate responses based on the information in Scite.ai, which could potentially assist researchers around the world.
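To make the idea of a citance and its surrounding context concrete, the sketch below finds sentences that cite a given numbered reference and returns each one together with its neighboring sentences. It is an illustrative approximation only and does not represent how Scite.ai works internally: the function names, the naive sentence splitter, and the assumption of bracketed numeric citations such as [14] or [40–44] are all choices made for this example, and no attempt is made to classify citations as supporting, contrasting, or mentioning.

```python
import re

# Matches bracketed numeric citations such as [14], [15,16], or [40–44].
BRACKET = re.compile(r"\[([0-9,\s\-–]+)\]")


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def cited_numbers(sentence):
    """Return the set of reference numbers cited in a sentence."""
    numbers = set()
    for group in BRACKET.findall(sentence):
        for part in group.split(","):
            part = part.strip()
            if not part:
                continue
            bounds = [b.strip() for b in re.split(r"[-–]", part)]
            if len(bounds) == 2 and all(b.isdigit() for b in bounds):
                # Expand a range such as 40–44 into 40, 41, ..., 44.
                numbers.update(range(int(bounds[0]), int(bounds[1]) + 1))
            elif part.isdigit():
                numbers.add(int(part))
    return numbers


def citance_contexts(text, ref_number, window=1):
    """Return each citance of ref_number with `window` sentences of context."""
    sentences = split_sentences(text)
    contexts = []
    for i, sentence in enumerate(sentences):
        if ref_number in cited_numbers(sentence):
            contexts.append({
                "before": sentences[max(0, i - window):i],
                "citance": sentence,
                "after": sentences[i + 1:i + 1 + window],
            })
    return contexts


# Hypothetical passage from a citing article.
SAMPLE = (
    "Chatbots are widely used. "
    "Earlier work reported gains in learning [14]. "
    "Later studies disagreed [15,16]. "
    "More data are needed."
)

if __name__ == "__main__":
    for context in citance_contexts(SAMPLE, 14):
        print(context)
```

Returning the sentences before and after the citance lets a reader judge how a source is being used without opening the full text of the citing article, which is the benefit the paragraph above attributes to citation context.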

- In the domain of research and publication ethics, these insights are instrumental in guiding the development and utilization of AI chatbots. For example, adopting a structured approach to AI ethics can aid in the creation of chatbots that engage with users in an ethical manner. It is essential to use clear and precise language to prevent any miscommunication or misinterpretation of research findings that are disseminated via chatbots. Data-driven insights can pinpoint critical ethical considerations for the deployment of AI chatbots in research and publication contexts. The establishment of practical and enforceable ethical codes can direct the application of AI chatbots, tackling issues such as data privacy, informed consent, and transparency. Ethical training can provide researchers and publishers with the requisite knowledge to employ AI chatbots in an ethical fashion. Finally, comprehending the influence of AI across different sectors can shape the implementation of AI chatbots within those domains, including research and publication.

- However, there are gaps that future research needs to address. Empirical research on the actual use and impact of AI chatbots in research and publication is needed. Additionally, more effort is required to develop and implement ethical guidelines or codes of conduct for AI chatbots. Finally, research is necessary to train researchers and publishers in the ethical use of AI chatbots. These areas offer opportunities for future research to enhance the ethical application of AI chatbots in research and publication. The use of AI and chatbots in education and research introduces challenges related to security, accuracy, and data protection. Their deployment should be accompanied by ongoing research into their ethical implications, along with the establishment of appropriate regulations to ensure their effective integration into existing systems.

ChatGPT with Citations

- The integration of AI and chatbots in education and research has brought about a paradigm shift in various sectors. These technologies, while offering immense potential benefits, also pose significant ethical challenges. It is crucial to develop a comprehensive understanding of these ethical implications to ensure the responsible and ethical use of AI, autonomous systems, and chatbots.

- However, a recent study by Hur and Yun [38] highlights concerns regarding the current training in research and publication ethics within academic institutions. Considering their significance for maintaining ethical standards in academia, the sporadic and brief nature of these training sessions calls into question their efficacy in adequately instructing researchers and publishers.

- Additionally, the uniformity of training content across various academic fields implies a lack of customization to address the specific ethical considerations inherent to each profession. Clearly, there is a need for specialized training programs that address the distinct ethical issues within different scientific communities. Regarding AI and chatbots, how can we ensure their ethical use in education and research? What are the principal ethical challenges faced by AI-powered devices, such as autonomous vehicles, and how can we effectively confront these challenges? Additionally, how can we steer future research in the field of AI ethics, and what role does the identification of key concepts and terms play in this endeavor? Furthermore, how does the incorporation of chatbots into educational settings affect the ethical landscape?

- These considerations are foundational to our examination of AI and chatbot ethics, and the studies discussed in the following section shed light on these areas. They explore various aspects of AI ethics, including the development of ethical guidelines and principles for autonomous machines and chatbots, the challenges of teaching ethics to machines, the role of keyword identification in guiding AI ethics research, and the impact of AI and chatbots on education.

Current State of Research and Publication Ethics Training in Academic Organizations

- The integration of AI technologies, including tools like chatbots, into the realm of research and publication, is driving significant transformations and sparking fresh ethical considerations. These AI capabilities hold promise for enhancing efficiency and accuracy, but simultaneously pose substantial ethical quandaries that must be navigated. In response to these emerging challenges, notable organizations such as Elsevier and the Committee on Publication Ethics (COPE) have stepped up to lead discussions and formulate guidelines. Elsevier has taken a unique approach to address AI’s role in research and publication [39]. Their policy focuses on the evolving definition of authorship in the AI era, emphatically stating that the responsibilities associated with authorship can only be fulfilled by human entities. Furthermore, it delves into research design and copyright concerns that are closely linked to AI’s integration into the scholarly environment. These key points are summarized in Table 2, which addresses the central questions on AI usage in research and publication. In contrast, COPE’s guidelines encompass a broader spectrum of AI implications. They consider both the opportunities and challenges brought by AI, from its potential to enhance research integrity and precision to the thorny issues around the creation of AI-generated papers and AI’s reliability. A cornerstone of their discussion is the assertion that human authors must assume full responsibility for the content produced by AI tools. These important details are displayed in Table 3 [40–44].

- The perspectives offered by Elsevier and COPE each bring unique insights to the table, collectively forming a complex dialogue that is shaping our understanding of the ethical role of AI in research and publication. In this section, we will explore these discussions in greater depth, identifying key points and extrapolating their implications for the ethics of research and publication in the AI age.

- Elsevier’s policy [39] prohibits crediting AI and AI-assisted tools as authors, because authorship entails obligations and accountability that only human beings can fulfill. These responsibilities include ensuring the integrity of the work, approving the final version, verifying originality, and assuming legal liability, among other duties. Elsevier’s policy is adaptable and may evolve as generative AI and AI-enhanced technologies advance. Tasks such as grammar and spelling checks and reference management are not covered by this policy, and tools for them may be used without disclosure. The policy specifically addresses the use of generative AI tools, such as large language models, in the scientific writing process. AI tools can be employed in research design or certain experimental procedures, but their use must be explicitly described in the Methods section.

- If AI-assisted tools are used during the writing process, authors must include a declaration specifying the tool used and the reason for its use. It should be noted that authors are not permitted to use AI tools to create or modify images in their writing, except when such use is an integral part of the research design or methodology. Any such use must be clearly explained in the manuscript. Regarding copyright, Elsevier’s authorship policy does not allow AI and AI-assisted tools to be listed as authors. If they are used, their involvement must be clearly disclosed in a separate section, and authors must adhere to the standard publishing agreement process. This process involves either transferring or retaining copyright, depending on the article type.

- Both the position statement [40] and Levene’s study [41] on AI and authorship conclude that AI tools cannot be considered authors because they cannot meet the criteria for authorship. They reach this conclusion by different routes. The position statement [40] emphasizes the legal and ethical responsibilities that AI tools cannot fulfill, while Levene’s exploration [41] focuses on the limitations of AI tools in terms of reliability and truthfulness. Both sources underscore the need for human authors to take full responsibility for the content produced by AI tools.

- The issues that AI poses for research integrity are highlighted in both the COPE forum on AI and fake papers [42], and a guest editorial on the challenge of AI chatbots for journal editors [43]. In the COPE forum [42], the use of AI in the creation of fake papers and the need for improved methods of detecting fraudulent research were discussed. The guest editorial [43], in contrast, explained the challenges that AI chatbots present for journal editors, including difficulties in plagiarism detection (which is further detailed in a later section on chatbot detectors). These discussions collectively suggest that human judgment, along with the use of appropriate software, is strongly recommended to address these challenges.

- The COPE webinar on trustworthy AI for the future of publishing [44] provided a broader perspective on the ethical issues related to the application of AI in editorial publishing processes. The webinar explored the benefits of AI in enhancing efficiency and accuracy in processing, but it also emphasized key ethical concerns such as prejudice, fairness, accountability, and explainability. It complemented the previous discussions by emphasizing the need for trustworthy AI in the publication process.

- The aforementioned discussions suggest that although AI tools can improve efficiency and accuracy in the research and publication process, they also pose substantial ethical challenges. Future research could concentrate on developing guidelines for using AI tools, employing AI detection tools to identify fraudulent research, addressing potential biases in AI-generated content, and managing the ethical implications of AI use in decision-making steps within research and publication processes.

- Chatbot detectors

- In scientific and academic communities, ChatGPT has received mixed responses reflecting the history of debates regarding the benefits and risks of advanced AI technologies [45,46]. On one hand, ChatGPT and other large language models (LLMs) can be useful for conversational and writing tasks, helping to improve the effectiveness and accuracy of the required output [47,48]. On the other hand, concerns have been raised about potential bias arising from the datasets used to train chatbots, which may limit their capabilities and could result in factual inaccuracies that appear scientifically plausible (a phenomenon termed “hallucinations”) [49]. Additionally, the dissemination of misinformation using LLMs raises security concerns, including the potential for cyber-attacks, which should also be considered [50].

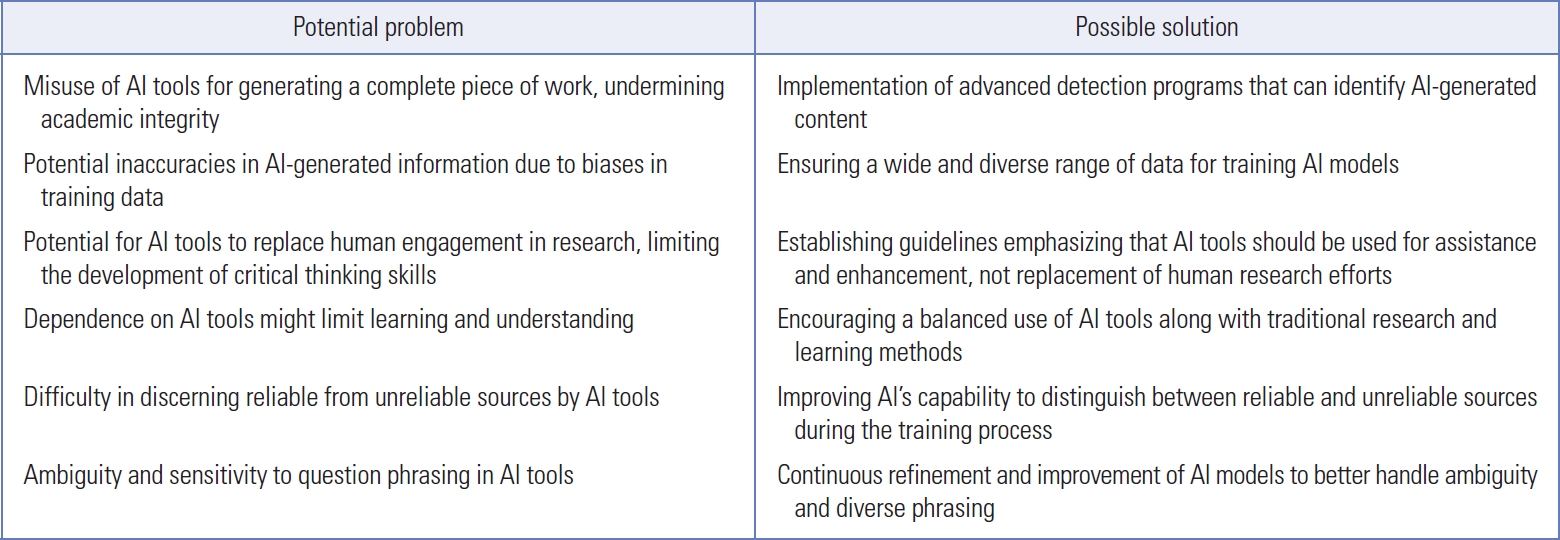

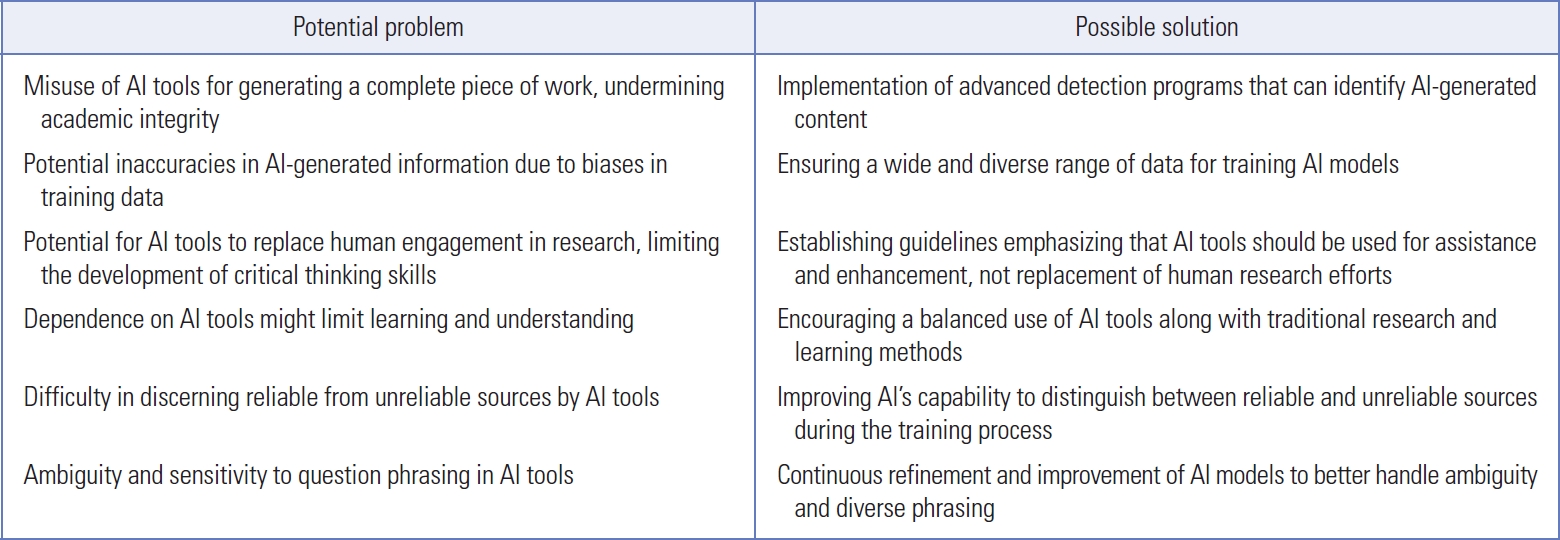

- In academia, students across various disciplines frequently encounter a wide range of questions, from simple clarifications to intricate academic inquiries. Tools like ChatGPT, powered by an LLM-based transformer model, can provide relevant information and potential solutions. However, a prevalent concern is that students may misuse these tools. Instead of using AI-based assistance for refining and enhancing their work, there is a risk of students using it to generate their entire assignments, thereby compromising the learning process and academic integrity. To address these concerns, the implementation of sophisticated detection programs can be considered. These programs could help identify content generated through AI-assisted tools, thereby promoting the responsible use of such technologies in academia. It may also be useful to compile a comprehensive table that outlines potential problems associated with AI tool usage in academic settings, citing the source of these issues and proposing potential solutions. These obstacles and responses in the application of AI technologies in academic research are illustrated in Fig. 2.

- Although chatbot detectors can be used to flag documents created by chatbots, their accuracy is questionable. For example, a GPT-2 Output Detector Demo (https://openai-openai-detector.hf.space/) incorrectly indicated that a copied blog post had a 92.54% probability of being original. Similarly, a poem composed by ChatGPT was erroneously classified as genuine upon submission. These inconsistencies show that such detectors are not yet dependable for discerning chatbot-generated text. Consequently, it is crucial to approach chatbot-generated text with caution and not to rely solely on detectors [51].

- AI chatbots like ChatGPT present significant challenges, despite being transformative across sectors like education and healthcare [52]. They often struggle to distinguish between reliable and unreliable sources, echoing previous concerns about potential inaccuracies or “hallucinations” due to biases in their training data. The growing use of AI algorithms in the research and publication ecosystem has raised substantial concerns about integrity issues [53,54]. This mirrors the previously highlighted risk of misuse, particularly where research scientists might be tempted to rely on these tools to generate their entire body of work, thereby compromising the research process. Moreover, these AI systems can be overly sensitive to the phrasing of questions and may falter when faced with ambiguous prompts, further fueling doubts about the reliability of chatbot detectors. To mitigate these multifaceted concerns, one potential solution could be to encourage users, especially junior scientists, to provide citations for AI-generated content. This would underscore the importance of using these advanced technologies responsibly and ethically [47,55].
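- The caution against relying solely on detectors, raised earlier in this subsection, can be illustrated with a short calculation. The sketch below applies Bayes’ rule to a detector flag. The function name and all three input figures are hypothetical and chosen only for illustration; they are not measurements of any tool discussed in this article.

```python
def posterior_ai_given_flag(prior_ai, sensitivity, false_positive_rate):
    """Probability that a flagged text is AI-generated, by Bayes' rule.

    prior_ai            -- assumed share of AI-generated texts among submissions
    sensitivity         -- assumed share of AI-generated texts the detector flags
    false_positive_rate -- assumed share of human-written texts wrongly flagged
    """
    flagged_ai = sensitivity * prior_ai
    flagged_human = false_positive_rate * (1.0 - prior_ai)
    return flagged_ai / (flagged_ai + flagged_human)


# Hypothetical inputs: 10% of submissions are AI-generated, and the detector
# flags 90% of them while also flagging 10% of human-written texts.
p = posterior_ai_given_flag(prior_ai=0.10, sensitivity=0.90, false_positive_rate=0.10)
print(f"Probability a flagged text is AI-generated: {p:.2f}")
```

Under these assumed figures, a flag is no more informative than a coin toss, which is one reason human judgment is recommended alongside any detector.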

Publication Ethics and Issues Posed by AI and Chatbots

- Numerous AI tools and services have emerged in the digital landscape of academic research, promising to fundamentally alter the way we analyze and create content. These tools employ sophisticated technologies, such as machine learning and NLP, to perform a wide array of tasks. These technologies are revolutionizing both industry and academia by generating text that mimics human speech, categorizing information, and detecting plagiarism. This article explores some of the notable tools that are currently making a significant contribution to the development of the AI domain. Table 4 provides details of these AI tools and services.

- GPTZero (GPTZero Inc)

- GPTZero is a detection tool rather than a text generator. It predicts whether a document was written by a large language model and is aimed largely at educational settings, where it offers transparency into the use of AI in the classroom and helps assess AI’s role in creating educational materials.

- AI Text Classifier (OpenAI)

- This is a machine learning classifier specialized for distinguishing human-written from AI-written text, built on a fine-tuned GPT model. Like other text classifiers, it is trained on a dataset in which each text sample is labeled with its category. It learns the patterns and features characteristic of each category and, once trained, assigns new text samples to the most suitable category.

- Academic AI Detector (PubGenius Inc)

- This is a tool for discerning whether a piece of academic writing was composed by a human or generated by an AI system. It does so by evaluating aspects of the text such as syntax, semantics, and coherence. It is particularly useful in identifying instances of academic plagiarism.

- Hive Moderation (Hive)

- This is a content moderation service that combines the capabilities of AI and human moderators to oversee and regulate user-generated content on various online platforms. Machine learning algorithms are deployed to flag potentially harmful or inappropriate content, which is then passed on to human moderators for a final review.

- Copyleaks (Copyleaks Technologies Ltd)

- This is an AI-driven plagiarism detection software capable of identifying instances of plagiarism within a text. The software compares the input text against a comprehensive database of online content to find any matching content.

- Writer’s AI Content Detector (Writer Inc)

- This tool is offered by Writer, whose AI and NLP technologies assess written content and suggest improvements to grammar, sentence structure, spelling, and readability for writers, editors, and content creators. The detector itself checks text for potential AI-generated content and supports authenticity checks.

- Crossplag AI Content Detector (Crossplag LLC)

- Similar to Copyleaks, Crossplag is a plagiarism detection tool that uses AI and machine learning to identify plagiarism in textual content. It compares the text against a comprehensive online content database to find matches or similarities [56].
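- Several of the tools above are described as comparing a text against a database of existing content. The sketch below illustrates that general idea with word-trigram overlap (Jaccard similarity) against a tiny in-memory collection. It is an assumed, simplified technique for illustration only; the commercial tools do not publish their methods here, and the function names and sample texts are hypothetical.

```python
import re


def word_ngrams(text, n=3):
    """Return the set of lowercase word n-grams in a text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Share of n-grams common to both sets (0.0 when both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def best_match(submission, references, n=3):
    """Return the name and score of the most similar reference text."""
    sub = word_ngrams(submission, n)
    scores = {name: jaccard(sub, word_ngrams(text, n))
              for name, text in references.items()}
    name = max(scores, key=scores.get)
    return name, scores[name]


# Hypothetical reference collection standing in for an online content database.
references = {
    "doc_a": "Machine learning algorithms are deployed to flag inappropriate content.",
    "doc_b": "The software compares the input text against a database of online content.",
}
submission = "Our tool compares the input text against a database of online content."

name, score = best_match(submission, references)
print(f"Closest reference: {name} (similarity {score:.2f})")
```

A score near 1 indicates near-verbatim overlap, but any cutoff for flagging a text would be a policy choice, and paraphrased or AI-rewritten passages can evade this kind of surface matching.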

Chatbot Detection Applications

- The integration of AI and chatbots across various sectors presents both intriguing potential and significant challenges. Specifically, their application has transformed industries such as data analysis, customer service, and academic research, while also raising complex ethical and integrity-related issues.

- The use of AI and chatbots in academic research has significantly improved both efficiency and accuracy. Nevertheless, the advent of these technologies has also prompted significant ethical concerns regarding authorship, the creation of synthetic content, and the dependability of AI-generated information. Consequently, navigating this changing terrain in adherence to the guidelines and regulations set forth by organizations such as Elsevier and COPE has become imperative. Moreover, the potential misuse of AI and chatbots, especially in matters of authorship, highlights the pressing need for advanced detection programs. These programs are essential in identifying AI-generated content, thereby fostering the responsible application of such technologies and maintaining the integrity of scholarly publications.

- This narrative is further complicated by the novel concept of infusing empathy into chatbots and AI. As detailed in the section of this manuscript on ethical AI, the integration of empathy could markedly enhance interactions between AI and humans across various domains, particularly in research, education, and ethics training for publication. However, this potential advancement does not diminish the essential role of human experts in overseeing the ethical and societal ramifications of these technologies.

- While the journey toward empathic AI presents inherent obstacles, such as potential conflicts between empathic responsibilities and functional goals, the potential benefits are substantial. Empathic AI could help uphold research and publication ethics by overcoming the constraints of human empathy, thanks to its scalable cognitive complexity. It is equally crucial to recognize the role of existing scientific infrastructure in nurturing and upholding research integrity.

- The use of AI and chatbots in research and scholarly communities necessitates a careful balance between their vast potential and the associated issues related to ethics, security, and integrity. This requires strengthened ethics training and clear guidelines regarding AI’s role in authorship. We must trust these systems while remaining cognizant of the challenges they pose, as the exciting prospect of empathic AI could significantly impact ethical standards. It is imperative to promote ongoing discussion, regulation, and research as AI and chatbot technologies evolve and infiltrate various industries, ensuring we navigate this complex yet promising terrain successfully. With the advent of empathic AI, vigilance in maintaining research integrity and ethical considerations is crucial, guaranteeing that these cutting-edge developments genuinely benefit human well-being and societal advancement.

Conclusion and Future Prospects


Conflict of Interest

Cheol-Heui Yun has served as the Ethics Editor of Science Editing since 2020 but had no role in the decision to publish this article. No other potential conflict of interest relevant to this article was reported.


Funding

This work was partially supported by a grant from the National Research Foundation of Korea (NRF; No. NRF-2023J1A1A1A01093462).


Data Availability

Data sharing is not applicable to this article as no new data were created or analyzed.

Notes

Supplementary Materials

| Focus | Key point | Reference |
|---|---|---|
| Ethical behavior of machines | Proposed a paradigm of case-supported principle-based behavior. | [29] |
| | Emphasis on defining ethical guidelines for autonomous machines. | |
| Ethical decisions by machines | Challenges faced by AI-equipped machines like autonomous cars. | [30] |
| | Minimal requirement to teach machines ethics based on traditional human choices. | |
| AI governance and techniques | Proposed a taxonomy: | [31] |
| | (1) Exploring ethical conundrums | |
| | (2) Individual ethical decision frameworks | |
| | (3) Collective ethical decision frameworks | |
| | (4) Ethics in human-AI interactions | |
| | Highlighted key techniques and future research directions. | |
| AI ethics discourse | Emphasis on language and terminology in AI ethics through a keyword-based systematic mapping study. | [32] |
| | Importance of specific concepts and their definitions. | |
| AI ethics in research | Quantitative analysis of ethics-related research in leading AI and robotics venues. | [33,34] |
| | Emphasis on the need for feasible ethical regulations in the face of rapid tech advancements. | |
| AI and education | Importance of ethical training for students. | [23,35] |
| | Emphasis on proactive education for ethical decision-making. | |
| | Assessment of AI’s impact on educational structures and processes. | |

| Topic | Description |
|---|---|
| Authorship and AI tools, COPE position statement [40] | This position statement emphasizes the legal and ethical responsibilities that AI tools cannot fulfill and underscores the need for human authors to take full responsibility for the content produced by AI tools. |
| AI and authorship [41] | Levene’s study [41] focuses on the limitations of AI tools in terms of reliability and truthfulness. It asserts that AI tools cannot meet the criteria for authorship and backs the need for human authors to be fully responsible for AI-generated content. |
| AI and fake papers [42] | This study discusses the use of AI in creating fake papers and highlights the need for improved means to detect fraudulent research. It implies the need for human judgment, in addition to the use of suitable software, to overcome these challenges. |
| The challenge of AI chatbots for journal editors [43] | The guest editorial elaborates on the challenges that AI chatbots pose for journal editors, including issues with plagiarism detection. It suggests the application of human judgment and suitable software to overcome these challenges. |
| Trustworthy AI for the future of publishing [44] | The COPE webinar offers a broader perspective on the ethical issues related to AI’s application in editorial publishing processes. It explores AI’s benefits in enhancing efficiency and accuracy, while also emphasizing key ethical concerns such as prejudice, fairness, accountability, and explainability. The webinar highlights the necessity for trustworthy AI in the publication process. |

| AI tool | Description |
|---|---|
| GPTZero (GPTZero Inc; https://gptzero.me/) | Offers clarity and transparency into the use of AI in the classroom; predicts whether a document was written by a large language model; detects AI-generated content in educational settings; assesses AI’s role in creating educational materials. |
| AI Text Classifier (OpenAI; https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text) | Specialized for distinguishing between human and AI-written text, utilizing a fine-tuned GPT model. |
| Academic AI Detector (PubGenius Inc; https://typeset.io/ai-detector) | Specifically designed to identify AI-generated academic texts; supports plagiarism detection and academic integrity checks. |
| Hive Moderation (Hive; https://hivemoderation.com/ai-generated-content-detection) | Offers real-time identification and origin tracing of AI-generated content; detects plagiarism, allowing users to enforce academic integrity; supports digital platforms in implementing site-wide bans on AI-generated media; enables social platforms to create new filters to identify and tag AI-generated content. |
| Copyleaks (Copyleaks Technologies Ltd; https://copyleaks.com/) | Scans the internet for potential plagiarism; available in multiple languages; supports academic integrity and copyright protection. |
| Writer’s AI Content Detector (Writer Inc; https://writer.com/ai-content-detector/) | Potential AI-generated content detection and authenticity checks. |
| Crossplag AI Content Detector (Crossplag LLC; https://crossplag.com/ai-content-detector/) | Combines AI detection with plagiarism checking for comprehensive content analysis. |

- 1. Sayed WS, Noeman AM, Abdellatif A, et al. AI-based adaptive personalized content presentation and exercises navigation for an effective and engaging E-learning platform. Multimed Tools Appl 2023;82:3303-33. https://doi.org/10.1007/s11042-022-13076-8.

- 2. Jiang R. How does artificial intelligence empower EFL teaching and learning nowadays? A review on artificial intelligence in the EFL context. Front Psychol 2022;13:1049401. https://doi.org/10.3389/fpsyg.2022.1049401.

- 3. Wang H, Tlili A, Huang R, et al. Examining the applications of intelligent tutoring systems in real educational contexts: a systematic literature review from the social experiment perspective. Educ Inf Technol (Dordr) 2023;28:9113-48. https://doi.org/10.1007/s10639-022-11555-x.

- 4. Kumar K, Kumar P, Deb D, Unguresan ML, Muresan V. Artificial intelligence and machine learning based intervention in medical infrastructure: a review and future trends. Healthcare (Basel) 2023;11:207. https://doi.org/10.3390/healthcare11020207.

- 5. Pillai SV, Kumar RS. The role of data-driven artificial intelligence on COVID-19 disease management in public sphere: a review. Decision 2021;48:375-89. https://doi.org/10.1007/s40622-021-00289-3.

- 6. Kalpana, Srivastava A, Jha S. Data-driven machine learning: a new approach to process and utilize biomedical data. In: Roy S, Goyal LM, Balas VE, Agarwal B, Mittal M, editors. Predictive modeling in biomedical data mining and analysis. Elsevier; 2022. p. 225–52.

- 7. Li M, Chen Y. Using artificial intelligence assisted learning technology on augmented reality-based manufacture workflow. Front Psychol 2022;13:859324. https://doi.org/10.3389/fpsyg.2022.859324.

- 8. Park W, Kwon H. Implementing artificial intelligence education for middle school technology education in Republic of Korea. Int J Technol Des Educ 2023 Feb 20 [Epub]. https://doi.org/10.1007/s10798-023-09812-2.

- 9. Lai T, Xie C, Ruan M, Wang Z, Lu H, Fu S. Influence of artificial intelligence in education on adolescents’ social adaptability: the mediatory role of social support. PLoS One 2023;18:e0283170. https://doi.org/10.1371/journal.pone.0283170.

- 10. Adamopoulou E, Moussiades L. An overview of chatbot technology. In: Maglogiannis I, Iliadis L, Pimenidis E, editors. Artificial intelligence applications and innovations. Proceedings of the 16th IFIP WG 12.5 International Conference, AIAI 2020; 2020 Jun 5–7; Neos Marmaras, Greece. Springer Cham; 2020. p. 373–83. https://doi.org/10.1007/978-3-030-49186-4_31.

- 11. Sheehan B, Jin HS, Gottlieb U. Customer service chatbots: anthropomorphism and adoption. J Bus Res 2020;115:14-24. https://doi.org/10.1016/j.jbusres.2020.04.030.

- 12. Smutny P, Schreiberova P. Chatbots for learning: a review of educational chatbots for the Facebook Messenger. Comput Educ 2020;151:103862. https://doi.org/10.1016/j.compedu.2020.103862.

- 13. Fitzpatrick KK, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR Ment Health 2017;4:e19. https://doi.org/10.2196/mental.7785.

- 14. Klímová B, Ibna Seraj PM. The use of chatbots in university EFL settings: research trends and pedagogical implications. Front Psychol 2023;14:1131506. https://doi.org/10.3389/fpsyg.2023.1131506.

- 15. Merelo JJ, Castillo PA, Mora AM, et al. Chatbots and messaging platforms in the classroom: an analysis from the teacher’s perspective. Educ Inf Technol (Dordr) 2023 May 24 [Epub]. https://doi.org/10.1007/s10639-023-11703-x.

- 16. Kumar JA. Educational chatbots for project-based learning: investigating learning outcomes for a team-based design course. Int J Educ Technol High Educ 2021;18:65. https://doi.org/10.1186/s41239-021-00302-w.

- 17. Moldt JA, Festl-Wietek T, Madany Mamlouk A, Nieselt K, Fuhl W, Herrmann-Werner A. Chatbots for future docs: exploring medical students’ attitudes and knowledge towards artificial intelligence and medical chatbots. Med Educ Online 2023;28:2182659. https://doi.org/10.1080/10872981.2023.2182659.

- 18. Wollny S, Schneider J, Di Mitri D, Weidlich J, Rittberger M, Drachsler H. Are we there yet?: a systematic literature review on chatbots in education. Front Artif Intell 2021;4:654924. https://doi.org/10.3389/frai.2021.654924.

- 19. Finwin Technologies. What are the different types of chatbots? [Internet]. Medium; 2020 [cited 2023 Jun 5]. Available from: https://medium.com/finwintech/what-are-thedifferent-types-of-chatbots-c99cdd6b3248.

- 20. Stokel-Walker C. ChatGPT listed as author on research papers: many scientists disapprove. Nature 2023;613:620-1. https://doi.org/10.1038/d41586-023-00107-z.

- 21. van Dis EA, Bollen J, Zuidema W, van Rooij R, Bockting CL. ChatGPT: five priorities for research. Nature 2023;614:224-6. https://doi.org/10.1038/d41586-023-00288-7.

- 22. Garzcarek U, Steuer D. Approaching ethical guidelines for data scientists [Preprint]. Posted 2019 Jan 14. arXiv 1901.04824. https://doi.org/10.48550/arXiv.1901.04824.

- 23. Goldsmith J, Burton E. Why teaching ethics to AI practitioners is important. In: Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence; 2017 Feb 4–9; San Francisco, CA, USA. Proc AAAI Conf Artif Intell 2017;31:4836-40. https://doi.org/10.1609/aaai.v31i1.11139.

- 24. Burton E, Goldsmith J, Koenig S, Kuipers B, Mattei N, Walsh T. Ethical considerations in artificial intelligence courses. AI Mag 2017;38:22-34. https://doi.org/10.1609/aimag.v38i2.2731.

- 25. Følstad A, Araujo T, Law EL, et al. Future directions for chatbot research: an interdisciplinary research agenda. Computing 2021;103:2915-42. https://doi.org/10.1007/s00607-021-01016-7.

- 26. Sidlauskiene J, Joye Y, Auruskeviciene V. AI-based chatbots in conversational commerce and their effects on product and price perceptions. Electron Mark 2023;33:24. https://doi.org/10.1007/s12525-023-00633-8.

- 27. Crespi S, McLean K, Lloreda CL. The AI special issue, adding empathy to robots, and scientists leaving Arecibo [Internet]. Science; 2023 [cited 2023 Jun 20]. Available from: https://doi.org/10.1126/science.adj7011.

- 28. Christov-Moore L, Reggente N, Vaccaro A, et al. Preventing antisocial robots: a pathway to artificial empathy. Sci Robot 2023;8:eabq3658. https://doi.org/10.1126/scirobotics.abq3658.

- 29. Anderson M, Anderson SL. Toward ensuring ethical behavior from autonomous systems: a case-supported principle-based paradigm. Ind Rob 2015;42:324-31. https://doi.org/10.1108/IR-12-2014-0434.

- 30. Etzioni A, Etzioni O. Incorporating ethics into artificial intelligence. J Ethics 2017;21:403-18. https://doi.org/10.1007/s10892-017-9252-2.

- 31. Yu H, Shen Z, Miao C, Leung C, Lesser VR, Yang Q. Building ethics into artificial intelligence. In: Lang J, editor. Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence; 2018 Jul 13–19; Stockholm, Sweden. p. 5527–33. https://doi.org/10.24963/ijcai.2018/779.

- 32. Vakkuri V, Abrahamsson P. The key concepts of ethics of artificial intelligence: a keyword based systematic mapping study. In: 2018 IEEE International Conference on Engineering, Technology and Innovation (ICE/ITMC); 2018 Jun 17–20; Stuttgart, Germany. p. 1–6. https://doi.org/10.1109/ICE.2018.8436265.

- 33. Prates M, Avelar P, Lamb LC. On quantifying and understanding the role of ethics in AI research: a historical account of flagship conferences and journals. EPiC Ser Comput 2018;55:188-201. https://doi.org/10.29007/74gj.

- 34. Boddington P. Towards a code of ethics for artificial intelligence. Springer Cham; 2017. https://doi.org/10.1007/978-3-319-60648-4.

- 35. Chen L, Chen P, Lin Z. Artificial intelligence in education: a review. IEEE Access 2020;8:75264-78. https://doi.org/10.1109/ACCESS.2020.2988510.

- 36. scite. Assistant [Internet]. scite; c2023 [cited 2023 Jul 2]. Available from: https://scite.ai/assistant.

- 37. Nakov PI, Schwartz A, Hearst MA. Citances: citation sentences for semantic analysis of bioscience text. In: Proceedings of the SIGIR 04 Workshop on Search and Discovery in Bioinformatics; 2004.

- 38. Hur Y, Yun CH. Current status and demand for educational activities on publication ethics by academic organizations in Korea: a descriptive study. Sci Ed 2023;10:64-70. https://doi.org/10.6087/kcse.298.

- 39. Elsevier. The use of AI and AI-assisted writing technologies in scientific writing [Internet]. Elsevier; c2023 [cited 2023 Jul 8]. Available from: https://www.elsevier.com/about/policies/publishing-ethics/the-use-of-ai-and-ai-assistedwriting-technologies-in-scientific-writing.

- 40. Committee on Publication Ethics (COPE). Authorship and AI tools [Internet]. COPE; 2023 [cited 2023 Jul 4]. Available from: https://publicationethics.org/cope-positionstatements/ai-author.

- 41. Levene A. Artificial intelligence and authorship [Internet]. Committee on Publication Ethics (COPE); 2023 [cited 2023 Jul 4]. Available from: https://publicationethics.org/news/artificial-intelligence-and-authorship.

- 42. Eaton SE, Soulière M. Artificial intelligence (AI) and fake papers [Internet]. Committee on Publication Ethics (COPE); 2023 [cited 2023 Jul 6]. Available from: https://publicationethics.org/resources/forum-discussions/artificial-intelligence-fake-paper.

- 43. Watson R, Štiglic G. Guest editorial: the challenge of AI chatbots for journal editors [Internet]. Committee on Publication Ethics (COPE); 2023 [cited 2023 Jul 18]. Available from: https://publicationethics.org/news/challenge-aichatbots-journal-editors.

- 44. Committee on Publication Ethics (COPE). Seminar 2021: trustworthy AI for the future of publishing [Internet]. COPE; 2021 [cited 2023 Jul 18]. Available from: https://publicationethics.org/resources/seminars-and-webinars/artificialintelligence.

- 45. Tai MC. The impact of artificial intelligence on human society and bioethics. Tzu Chi Med J 2020;32:339-43. https://doi.org/10.4103/tcmj.tcmj_71_20.

- 46. Howard J. Artificial intelligence: implications for the future of work. Am J Ind Med 2019;62:917-26. https://doi.org/10.1002/ajim.23037.

- 47. Gordijn B, Have HT. ChatGPT: evolution or revolution? Med Health Care Philos 2023;26:1-2. https://doi.org/10.1007/s11019-023-10136-0.

- 48. Else H. Abstracts written by ChatGPT fool scientists. Nature 2023;613:423. https://doi.org/10.1038/d41586-023-00056-7.

- 49. Deng J, Lin Y. The benefits and challenges of ChatGPT: an overview. Front Comput Intell Syst 2022;2:81-3. https://doi.org/10.54097/fcis.v2i2.4465.

- 50. Huynh D, Hardouin J. PoisonGPT: how we hid a lobotomized LLM on Hugging Face to spread fake news [Internet]. Mithril Security; 2023 [cited 2023 Jul 25]. Available from: https://blog.mithrilsecurity.io/poisongpt-how-wehid-a-lobotomized-llm-on-hugging-face-to-spread-fakenews/.

- 51. Miller M. ChatGPT, chatbots and artificial intelligence in education [Internet]. Ditch That Textbook; 2022 [cited 2023 Aug 1]. Available from: https://ditchthattextbook.com/ai/.

- 52. Shen Y, Heacock L, Elias J, et al. ChatGPT and other large language models are double-edged swords. Radiology 2023;307:e230163. https://doi.org/10.1148/radiol.230163.

- 53. Kitamura FC. ChatGPT is shaping the future of medical writing but still requires human judgment. Radiology 2023;307:e230171. https://doi.org/10.1148/radiol.230171.

- 54. Graham F. Daily briefing: will ChatGPT kill the essay assignment? [Internet]. Nature; 2022 [cited 2023 Aug 4]. Available from: https://doi.org/10.1038/d41586-022-04437-2.

- 55. Gandhi Periaysamy A, Satapathy P, Neyazi A, Padhi BK. ChatGPT: roles and boundaries of the new artificial intelligence tool in medical education and health research: correspondence. Ann Med Surg (Lond) 2023;85:1317-8. https://doi.org/10.1097/MS9.0000000000000371.

- 56. Crossplag. AI content detector [Internet]. Crossplag; c2023 [cited 2023 Aug 5]. Available from: https://crossplag.com/ai-content-detector/.

References
