Influence of the top 10 journal publishers listed in Journal Citation Reports based on six indicators

Abstract

Purpose

An accurate evaluation of the influence of the largest publishers in world journal publishing is a starting point for negotiating journal subscriptions and an important issue for research libraries. This study was conducted to evaluate the influence of the largest publishers based on Journal Citation Reports (JCR) indicators.

Methods

From JCR 2014 to 2018 data, a unique journal list by publisher was created in Excel. The top 10 publishers were selected and evaluated in terms of the average share of six JCR indicators including the impact factor, Eigenfactor score, and article influence score, along with the number of journals, articles, and citations.

Results

The top three publishers accounted for about 50% of the JCR indicators, the top five for 60%, and the top 10 for 70%. Therefore, the concentration of the top three publishers, with a share exceeding 50% for five indicators, was more intensive than has been reported in previous studies. For the top 10 publishers, not only the number of journals and articles, but also citations and the impact factor, which reflect the practical use of journals, were increasing.

Conclusion

These evaluation results will be important to research libraries and librarians deciding on journal subscriptions using publisher information, to journal publishers trying to list their journals in JCR, and to consortium operators seeking to negotiate strategically. Various follow-up studies are possible using the unique journal list created in this research. However, there is also an urgent need to build a standardized world journal list with accurate information.

Introduction

Background/rationale: Academic journals published by over 8,000 publishers around the world are listed in Scopus by Elsevier and Web of Science (WoS) and Journal Citation Reports (JCR) by Clarivate Analytics. The largest commercial publishers are known to have a major influence on journal publishing. Properly grasping the influence of the largest publishers is the starting point for negotiating journal subscriptions, but it is difficult to know the exact situation. Libraries and librarians in various countries have been negotiating journal subscriptions with the largest publishers, without a clear sense of the influence and status of publishers. The same issue occurred during the negotiation of the Korean Electronic Site License Initiative. The authors have made various efforts in the Korean Electronic Site License Initiative negotiations, including studying alternatives to the big deal model [1,2]. However, the status of the largest publishers remains unknown.

It is also an important issue for research libraries to accurately evaluate journals and publishers and to properly understand and utilize the results of that evaluation in their work. The JCR has data on WoS-based journals and articles, so if the publisher imprints are accurately identified, the largest publishers can be roughly evaluated. Most studies of the influence of the largest publishers have dealt with the number of journals, articles, and citations. However, few studies have evaluated journal publishers in a more complex manner, using the impact factor (IF), Eigenfactor score (ES), and article influence score (AIS) [3,4], although a previous study evaluated publishers by JCR indicators [5].

Objectives: The goal of this study is to evaluate the influence of the largest journal publishers listed in JCR. To conduct both a qualitative and a quantitative evaluation, six JCR indicators were applied: the IF, ES, and AIS, along with the number of journals, articles, and citations. This study documents the status of each publisher and the dominance of the largest publishers, so its results can serve multiple purposes. Publishers will have an opportunity to review their position, librarians can use the results as a basis for subscription plans, and consortium operators can use them to shape negotiation strategies.

In JCR’s journal and article data, how much influence do the largest journal publishers have? To answer this question, JCR indicators based on original research and review articles were analyzed, with a particular focus on the largest journal publishers. We collected the journal data listed in JCR and selected six indicators that were judged to be highly relevant to the publisher’s influence. On that basis, we selected the top 10 publishers.

Methods

Ethics statement: Neither institutional review board approval nor informed consent was needed because this study did not deal with human subjects.

Study design: This was a literature database-based descriptive study.

Data collection: To make a unique journal list, the journal lists collected from JCR, WoS, and Scopus were combined using the VLOOKUP, IF, and FIND functions of Excel. The steps, in order, were: (1) downloading JCR 2014 to 2018 data to Excel; (2) comparing journal names and International Standard Serial Numbers to identify unique journals; (3) combining the annual article number, citation count, IF, ES, and AIS over the 5 years for each journal; (4) downloading the journal lists included in WoS and Scopus to obtain publisher information; (5) combining the journal lists to confirm unique journals in the order of JCR, WoS, and Scopus; (6) grouping journals by publisher imprints (Suppl. 1); and (7) finalizing the unique journal list while visually checking the combined list in Excel. Through this process, a unique journal list of 12,201 titles with six JCR indicators was created based on JCR data.
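The matching logic in steps (2), (4), and (5) can be illustrated with a minimal Python sketch. This is not the authors' procedure, which was carried out in Excel; the function names, record fields, and sample journals below are hypothetical, and the normalization rules (ISSN without hyphen, title without case, spaces, or punctuation) are assumptions chosen to mirror the matching problems described in the Limitation paragraph.

```python
import re


def normalize_issn(issn):
    """Return an ISSN as 8 characters without a hyphen, or None if unusable."""
    if not issn:
        return None
    cleaned = re.sub(r"[^0-9Xx]", "", issn).upper()
    return cleaned if len(cleaned) == 8 else None


def normalize_title(title):
    """Lowercase a title and drop spaces and punctuation."""
    return re.sub(r"[^a-z0-9]", "", title.lower())


def build_unique_list(jcr_rows, publisher_sources):
    """Deduplicate JCR rows, then attach a publisher from prioritized sources.

    jcr_rows: list of dicts with 'title' and 'issn' (hypothetical layout).
    publisher_sources: lists of dicts with 'title', 'issn', and 'publisher',
    in priority order (for example WoS first, then Scopus).
    """
    unique = {}
    for row in jcr_rows:
        key = normalize_issn(row.get("issn")) or normalize_title(row["title"])
        # The first occurrence wins, so duplicated rows collapse into one.
        unique.setdefault(
            key, {"title": row["title"], "issn": row.get("issn"), "publisher": None}
        )

    for source in publisher_sources:
        by_issn = {}
        by_title = {}
        for record in source:
            issn = normalize_issn(record.get("issn"))
            if issn:
                by_issn[issn] = record
            by_title[normalize_title(record["title"])] = record
        for journal in unique.values():
            if journal["publisher"]:
                continue  # already resolved by a higher-priority source
            match = by_issn.get(normalize_issn(journal["issn"]))
            if match is None:
                match = by_title.get(normalize_title(journal["title"]))
            if match:
                journal["publisher"] = match["publisher"]
    return list(unique.values())


# Hypothetical sample records, for illustration only.
jcr = [
    {"title": "Journal of Examples", "issn": "1234-5678"},
    {"title": "JOURNAL OF EXAMPLES", "issn": "12345678"},  # duplicate entry
    {"title": "Sample Letters", "issn": None},
]
wos = [{"title": "Journal of Examples", "issn": "1234-5678", "publisher": "Publisher A"}]
scopus = [
    {"title": "Sample  Letters", "issn": "", "publisher": "Publisher B"},
    {"title": "Journal of Examples", "issn": "1234-5678", "publisher": "Publisher Z"},
]

for journal in build_unique_list(jcr, [wos, scopus]):
    print(journal["title"], "|", journal["publisher"])
```

Journals that no source resolves keep an empty publisher, which corresponds to the journals classified as non-top 10 in this study. Automated matching of this kind would still require the manual checks described under Limitation.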

Statistical methods: This study was based on all target journals’ indicators, and only descriptive statistics were presented.

Results

Selection of the top 10 journal publishers in JCR

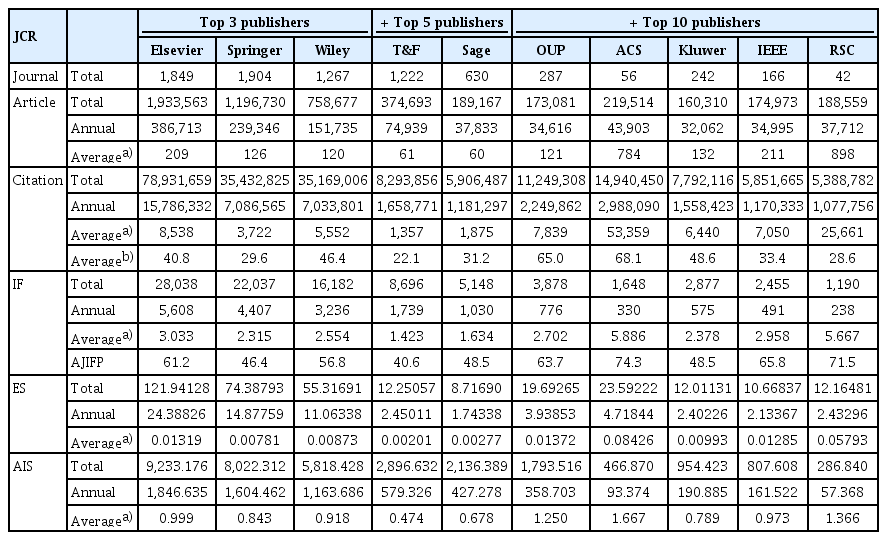

The citation rate is a valuable metric for assessing the influence of a journal in relation to other journals. Among various JCR indicators, this study applied six indicators, including journals, articles, and citations for a quantitative evaluation and IF, ES, and AIS for a qualitative evaluation. Each share of the six JCR indicators by publisher was calculated among 12,201 JCR journals and then, by averaging them without weight by publisher, the top three, top five, and top 10 publishers were selected (Table 1). Elsevier, Springer, and Wiley were classified as the top three publishers, and then Taylor & Francis (T&F) and Sage were added to the top five. Then, Oxford University Press (OUP), American Chemical Society (ACS), Wolters Kluwer (Kluwer), Institute of Electrical and Electronics Engineers (IEEE), and Royal Society of Chemistry (RSC) were added to the top 10. The top five were all commercial publishers, but there were several society publishers in the top 10. As this study focused on the top 10 publishers, all other publishers were described as non-top 10. For over 250 journals from JCR, it was not possible to find accurate information on the current publisher in WoS and Scopus, so the publisher column was left empty and they were classified as non-top 10 publishers.
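The ranking step described above (a share per indicator, then an unweighted average of the six shares) can be sketched in Python as follows. The publisher names and totals are hypothetical, and the sketch assumes that a publisher's share of the IF, ES, and AIS is its summed journal-level value divided by the total over all journals; the article does not spell out that detail.

```python
INDICATORS = ("journals", "articles", "citations", "if_sum", "es_sum", "ais_sum")


def indicator_shares(totals_by_publisher):
    """Return each publisher's percentage share of every indicator."""
    grand = {
        key: sum(values[key] for values in totals_by_publisher.values())
        for key in INDICATORS
    }
    return {
        name: {key: 100.0 * values[key] / grand[key] for key in INDICATORS}
        for name, values in totals_by_publisher.items()
    }


def rank_by_average_share(totals_by_publisher):
    """Average the six shares without weights and sort in descending order."""
    shares = indicator_shares(totals_by_publisher)
    averages = {
        name: sum(share.values()) / len(INDICATORS) for name, share in shares.items()
    }
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Hypothetical totals for three publishers, for illustration only.
totals = {
    "Publisher A": {"journals": 50, "articles": 600, "citations": 7000,
                    "if_sum": 150, "es_sum": 0.5, "ais_sum": 40},
    "Publisher B": {"journals": 30, "articles": 300, "citations": 2000,
                    "if_sum": 60, "es_sum": 0.3, "ais_sum": 35},
    "Publisher C": {"journals": 20, "articles": 100, "citations": 1000,
                    "if_sum": 90, "es_sum": 0.2, "ais_sum": 25},
}

for name, average in rank_by_average_share(totals):
    print(f"{name}: {average:.1f}%")
```

Because the average is unweighted, a publisher with few journals but high IF, ES, and AIS shares can rank above a publisher with more journals, which is how society publishers such as ACS and RSC can enter the top 10.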

Evaluation of the top 10 publishers by six JCR indicators

Comparing the top 10 publishers based on six JCR indicators, the difference in influence among them can be clearly seen (Table 2). Springer published the most journals, as it increased the number of its journals through frequent mergers and acquisitions, but Elsevier was still number one in the number of articles. In contrast, ACS and RSC, which published relatively few journals, took first place and second place in the average IF, ES, and AIS, as well as in the number of articles and citations per journal.

Looking at the annual number of articles in the JCR data, the top three publishers can be considered mega-publishers, publishing over 150,000 articles. In particular, Elsevier accounted for a 24.9% share of the total number of articles, publishing nearly 400,000 articles per year. The remaining six publishers produced about 30,000 to 50,000 articles annually, corresponding to a share of roughly 2%, except T&F with about 80,000 articles (4.8%). ACS and RSC had the smallest number of journals, but the annual number of articles per journal was about 800, much more than other publishers, indicating that they pursued efficient publishing with many articles in each journal. In contrast, T&F and Sage, which published about half of the social science journals, had the smallest number of articles in each journal, with an average of 60 articles. They were considered to pursue quantitative growth, focused on publishing small and diverse journals.

Elsevier, Wiley, OUP, ACS, and Kluwer had a strong influence on academia, with a higher citation ratio than article ratio. ACS had the highest average number of citations per article (68.1), followed by OUP (65.0). ACS and RSC were the most influential in terms of the number of citations per journal, with an average IF of 5.886 for ACS and 5.667 for RSC. Among the top three publishers, the average IF of Elsevier journals was the highest (3.033). Wiley published fewer journals and articles than Springer but had a higher average IF, indicating more citations on average per journal or article. The rest of the top 10 publishers had an average IF in the 2.x range, except T&F and Sage, whose average IF was in the 1.x range. Although not adopted as one of the six JCR indicators, the average journal impact factor percentile (AJIFP) is a useful indicator. The AJIFP assesses a journal’s standing within the related subject categories, scaled from 0% to 100%. Springer, T&F, Sage, and Kluwer were ranked under 50%, while ACS and RSC had the highest AJIFP.

The ES indicator measures the journal’s importance to the research community for 5 years, so the sum of all journals’ ES is about 100. In terms of average ES, ACS and RSC showed significantly higher impacts than other publishers, whereas T&F and Sage showed lower impacts. The average ES of the remaining six publishers was similar, without significant differences. The AIS indicator reflects the average influence of a journal’s articles over the first 5 years after publication. Therefore, the AIS is roughly analogous to the 5-year IF. ACS, RSC, and OUP had higher average AIS than other publishers, while T&F had the lowest, and the remaining six publishers showed similar levels.

Comparison of the influence of the top 10 publishers versus others

Comparing the six JCR indicators between the largest publishers and the others, the influence of the largest publishers can be clearly seen (Table 3). The top five publishers accounted for more than half of JCR journals. The top three publishers accounted for precisely half of the total number of articles, while the top 10 accounted for about 70%.

Looking at the annual citations per journal, the top three publishers had an average of about 6,000, with a share of 50.8% of the total citations. The citation share increased significantly from the top five publishers to the top 10. In terms of the IF, the majority of the top 10 publishers had an average of roughly 2, and were found to have more influence than in terms of articles and citations. In terms of the average IF, ES, and AIS per journal, when looking at the gap between the top three, top five, and top 10 publishers and the others, the difference for the top 10 publishers showed a similar trend to the top three. However, in the top five publishers, the shares of ES and AIS indicators were anomalous.

Consequently, the top three publishers have secured their position as the largest publishers, with more than half of the citations and the IF, reflecting the practical use of their journals as well as external metrics such as the number of articles. In addition, the top 10 publishers also showed a strong influence of around 70% in the five indicators except the number of journals. Therefore, extending the focus of this study to encompass the top 10 publishers, instead of the top three or five, was worthwhile.

Comparison of the influence of the top three, top five, and top 10 publishers

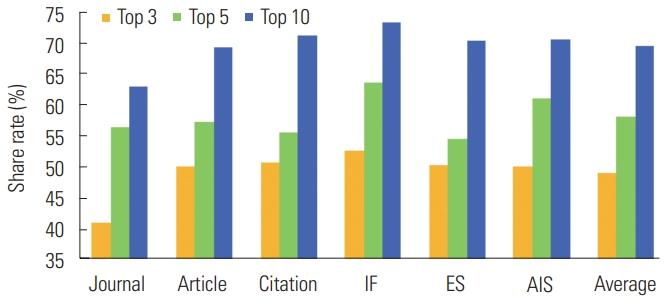

Fig. 1 shows the differences in the shares of the six JCR indicators among the largest publishers. The rightmost group in the figure shows the average of the six JCR indicators for all JCR journals, making it easy to see which indicator is below or above the average. The number of journals was the least relevant indicator. The top five publishers were weak in terms of articles, citations, and the ES. However, the top three and top 10, rather than the top five, were highly similar in most indicators, clearly showing their position in world journal publishing. The largest publishers were confirmed to be at the forefront of journal publishing, with the top three publishers accounting for about 50%, the top five for 60%, and the top 10 for 70%.

Share (percentage) of six Journal Citation Reports indicators (journal, article, citation, IF, ES, and AIS) for the top three, top five, and top 10 publishers. The average was calculated by six indicators based on Journal Citation Reports 2014 to 2018. IF, impact factor; ES, Eigenfactor score; AIS, article influence score.

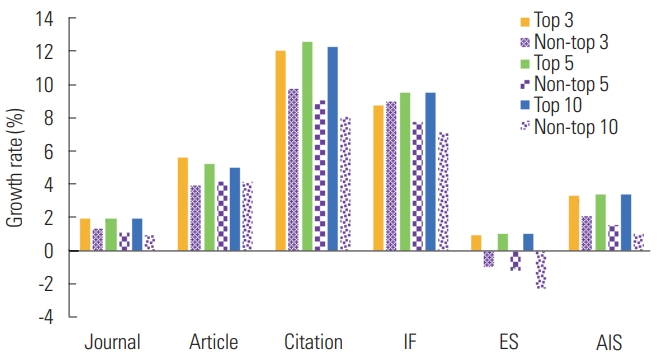

Comparing the growth rate of each indicator, the difference between the largest publishers and the others was clear (Fig. 2). Between JCR 2014 and JCR 2018, the growth rate for articles was higher than that for journals, and the growth rate for citations was higher than that for articles. The growth rates of the ES and AIS were small, but similar to that of journals. However, for the ES indicator, all other publishers showed negative growth. As such, the number of citations and the IF showed higher growth rates than the numbers of journals and articles, and the influence of the largest publishers was further strengthened qualitatively as drivers of the growth of JCR content.

Discussion

Evaluation and characteristics of the top 10 publishers: As shown in Table 2, the average number of citations per journal was higher for ACS and RSC than for Elsevier. OUP, IEEE, and Kluwer were cited more than Wiley or Springer. Compared with Elsevier, ACS showed a higher citation rate, with more than six times as many citations per journal and a higher number of citations per article. In contrast, T&F and Sage had very low influence in terms of citations. The influence of journal publishing is easily judged from external metrics, such as the number of journals or articles. However, when planning journal subscriptions, librarians need to consider citations and/or the IF, which reflect the practical use of journals, rather than their external scale.

Based on the share of the six JCR indicators, the influence of the top three, top five, and top 10 publishers in JCR was 49.1%, 58.0%, and 69.4%, respectively. Among the top three, Elsevier’s share was 22.2%. The gap between the top three and the next two was so large that it seemed a stretch to count T&F and Sage among the top five publishers. The top 10 publishers added only about 20 percentage points to the share of the top three, despite the addition of seven publishers. Ultimately, the top three publishers predominated, accounting for close to 50% of JCR indicators.
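The size of these gaps can be made explicit by dividing each increment in cumulative share by the number of publishers added. The short Python sketch below uses only the three shares reported above; the function name is illustrative.

```python
# Cumulative average share (%) of the six JCR indicators, as reported above.
cumulative_share = {3: 49.1, 5: 58.0, 10: 69.4}


def per_publisher_increments(cumulative):
    """Return (group size, share added, share added per added publisher)."""
    rows = []
    previous_n, previous_share = 0, 0.0
    for n in sorted(cumulative):
        added = cumulative[n] - previous_share
        rows.append((n, round(added, 1), round(added / (n - previous_n), 2)))
        previous_n, previous_share = n, cumulative[n]
    return rows


for n, added, per_publisher in per_publisher_increments(cumulative_share):
    print(f"top {n}: +{added} points, {per_publisher} points per added publisher")
```

On these figures, each of the top three publishers contributes about 16.4 percentage points on average, the two publishers added for the top five about 4.5 points each, and the five added for the top 10 about 2.3 points each.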

The concentration of the top three publishers: As the articles and journals of the largest publishers increased over the years, the average number of citations and the IF also increased, confirming numerically that the largest publishers led global journal publishing. According to a previous study that examined journal publishers from 1997 to 2009, six publishers produced more than 50% of journals, eight publishers accounted for more than 50% of articles, nine publishers did so for citations, and ten publishers did so for the IF [5]. In this study, from 2014 to 2018, the three largest publishers accounted for more than 50% of each of the five JCR indicators other than the number of journals, and four publishers published more than 50% of JCR journals. With frequent mergers and acquisitions between publishers, the concentration of the top three publishers has become stronger than it was 9 years earlier. As journal publishing becomes increasingly concentrated in the top three publishers, a meaningful aspect of this study is that it expanded the research scope to the top 10 publishers and evaluated them quantitatively and qualitatively based on six JCR indicators.

New findings from JCR indicator analysis: The JCR contained 7,773,372 research and review articles from the past 5 years, and these articles were cited 294,492,578 times over the same period. Thus, each JCR article was cited about 37.9 times on average. This study makes a meaningful contribution by showing general trends in JCR articles and journals. It presents objective results obtained from large-scale data, unlike previous studies. In addition, as the average IF of JCR journals over the 5 years was found to be 2.067, the average IF level of the major journals distributed through WoS can be grasped. This information is important for journal publishers who are trying to publish good journals suitable for being listed in JCR and WoS.
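The citations-per-article figure follows directly from the two totals, and the same totals can be checked against the per-journal averages given in the Conclusion (127 articles and 4,827 citations per journal). The Python sketch below does this arithmetic; it assumes that the per-journal averages are annual values over the 12,201 journals and 5 years, which the article does not state explicitly.

```python
articles = 7_773_372     # research and review articles over 5 years
citations = 294_492_578  # citations over 5 years
journals = 12_201        # titles in the unique journal list
years = 5

citations_per_article = citations / articles
articles_per_journal_year = articles / journals / years
citations_per_journal_year = citations / journals / years

print(round(citations_per_article, 1))    # 37.9
print(round(articles_per_journal_year))   # 127
print(round(citations_per_journal_year))  # 4827
```

Under that assumption, the rounded results agree with the reported values.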

Limitation: In the process of journal integration, if the accuracy of the Excel VLOOKUP comparison was poor due to diversity in journal names, new International Standard Serial Number assignment, journal duplication, the presence or absence of a space in the title, and so on, duplicated journals were merged manually. When identifying a unique journal from each journal list produced from various sources, it was not easy to check whether the same journals were perfect matches. As experienced career librarians, we did our best to reduce errors. The journals that switched publishers were analyzed under the assumption that the current publisher had published all the past articles, because it is time-consuming and difficult to analyze the history of publisher changes by year. JCR indicators of null and “0” were excluded when calculating the averages. Given the lack of humanities journals in the JCR, a limitation of this study is that it only dealt with science, technology, and medicine journals, as well as some in the social sciences.
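The exclusion rule for averages can be stated precisely in a few lines of Python. This is a sketch of the rule as described, not the authors' Excel formula; the function name and sample values are hypothetical.

```python
def mean_excluding_empty(values):
    """Average the values, skipping missing (None) and zero entries."""
    valid = [v for v in values if v is not None and v != 0]
    if not valid:
        return None  # no usable value for this indicator
    return sum(valid) / len(valid)


# Hypothetical IF values for one journal over 5 years.
print(mean_excluding_empty([2.0, None, 0, 4.0, 3.0]))
```

Skipping these entries means that a journal with missing years is averaged over fewer years rather than being pulled toward zero, so its average is higher than it would be if the missing years were counted as zero.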

Conclusion: On average, each JCR journal had 127 articles, 4,827 citations, an IF of 2.067, an ES of 0.00819, and an AIS of 0.755. As the number of publishers included increased from the top three to the top five and top 10, the overall influence was found to be about 50%, 60%, and 70%, respectively. The top 10 publishers, especially the top three publishers, overwhelmed other publishers. The concentration of the top three publishers was severe, as they led global journal publishing and even showed a major gap with T&F and Sage, which were added to form the top five. The remaining five publishers included in the top 10 reflected all aspects of the largest publishers, with high citation ratios compared to journal and article ratios. Therefore, libraries and librarians need to pay special attention not only to journals published by the top five commercial publishers, but also to those published by the five society and specialty publishers added to the top 10.

This study showed how further studies could be conducted if other journal-related information is combined with the unique journal list generated herein. The expected follow-up studies will address issues such as the article processing charges in open access journals, the prices of subscription journals, and the estimated market share by publisher. However, the lack of standardization of journal names was a problem, and it took a considerable amount of time to match the same journals across various journal lists. Therefore, it is imperative to establish an internationally standardized journal database covering world journals to maintain accurate journal information as well as to enable reasonable evaluations of journals and publishers.

Notes

Conflict of Interest

No potential conflict of interest relevant to this article was reported.

Data Availability

Most of the raw data in this paper are various indicator values of JCR, which is sold as a paid commercial database; therefore, sharing is not available. Please contact the corresponding author for raw data availability.

Supplementary Material

Supplementary file is available from: https://doi.org/10.6087/kcse.209.

Largest publisher imprints referenced by the Scopus classification